Content Moderation AI: Importance Explained

Content moderation is a process designed to keep our online communities safe, inclusive and accessible to all.

With users consuming and generating content in ever-increasing quantities, the need for automated content moderation is paramount.

As in many industries, artificial intelligence is leading the way here, offering efficient and scalable content moderation for challenges that are too resource-intensive for manual review to handle.

What is content moderation AI?

Content moderation involves the filtering and removal of content that is deemed harmful or hateful.

Using AI to perform the moderation can keep communities safer, because AI tools are often more scalable than traditional manual moderation and can work in real time.

Why is AI content moderation necessary?

The rapid growth of online platforms has brought with it a surge in user-generated and distributed content across social media, forums and other digital spaces.

While this digital expansion has helped to enrich the online experience for many, it has also given rise to issues such as hate speech, harassment and the spread of harmful material online.

Content moderation AI is one tool that digital admins and developers use to try to mitigate these issues and foster a safe and welcoming digital environment for all ages and backgrounds.

Traditional content moderation often relied on profanity filters for text-based content and on human moderators manually reviewing image- or video-based content. This approach had many drawbacks, including:

- Profanity filters struggled to understand the nuances behind the use of certain language

- Harmful content took an emotional toll on the moderators

- It was not a scalable solution for growing digital platforms focusing on user-generated content

- Manual review is slow and cumbersome, which could allow harmful content to spread far and wide before it is reviewed and removed

- It often relied upon users reporting content before it was reviewed

By leveraging advancements in machine learning models, AI-powered content moderation addressed many of the limitations of these traditional methods.

These algorithms enabled platforms to automate the detection and removal of inappropriate content, improving efficiency and reducing response times.

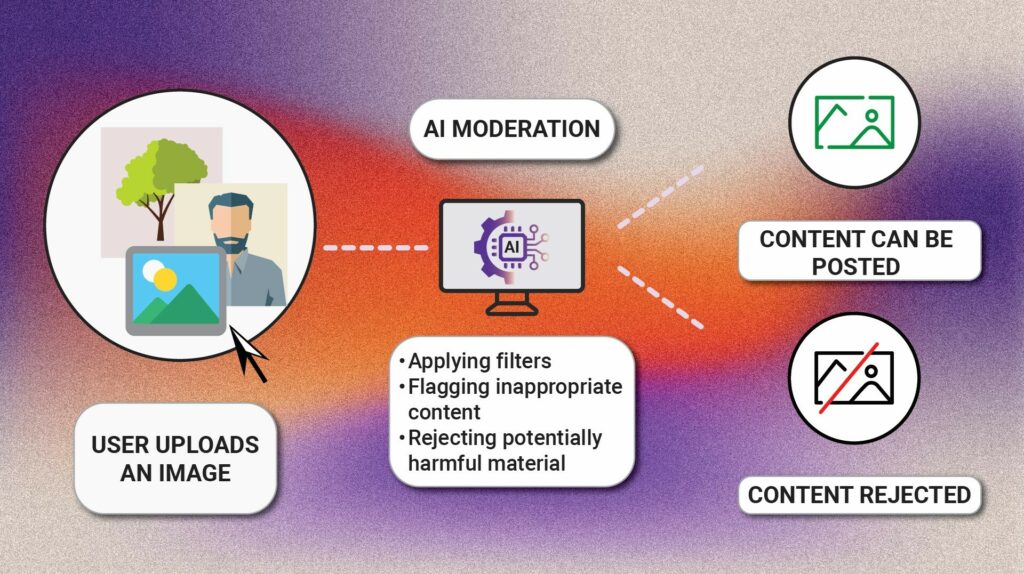

Before any post is made public, it could be scanned by a content moderation AI tool to ensure it meets the community guidelines.

For text-based content moderation, advancements in natural language processing and sentiment analysis have enabled content moderation tools to begin to understand context and nuances.

This had long been a weakness of simple profanity filters, which struggled to determine the difference between hate speech and attempts to discuss and challenge hate speech.
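The weakness described above can be seen in a minimal sketch of a keyword-based filter. The blocklist, the placeholder term `slurword` and the function name are assumptions made purely for illustration, not part of any real moderation product.

```python
import re

# Hypothetical blocklist; "slurword" is a placeholder, not a real term.
BLOCKLIST = {"slurword"}


def naive_profanity_filter(text: str) -> bool:
    """Return True if any blocklisted word appears in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)


abusive = "You are a slurword"
counter_speech = "Calling someone a slurword is never acceptable"

# The filter matches on the word alone, so both messages are flagged,
# even though the second one is challenging the abusive language.
print(naive_profanity_filter(abusive))         # True
print(naive_profanity_filter(counter_speech))  # True
```

Because the filter only checks for the presence of a word, it cannot tell the abusive message from the one condemning it. Context-aware language models aim to close this gap.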

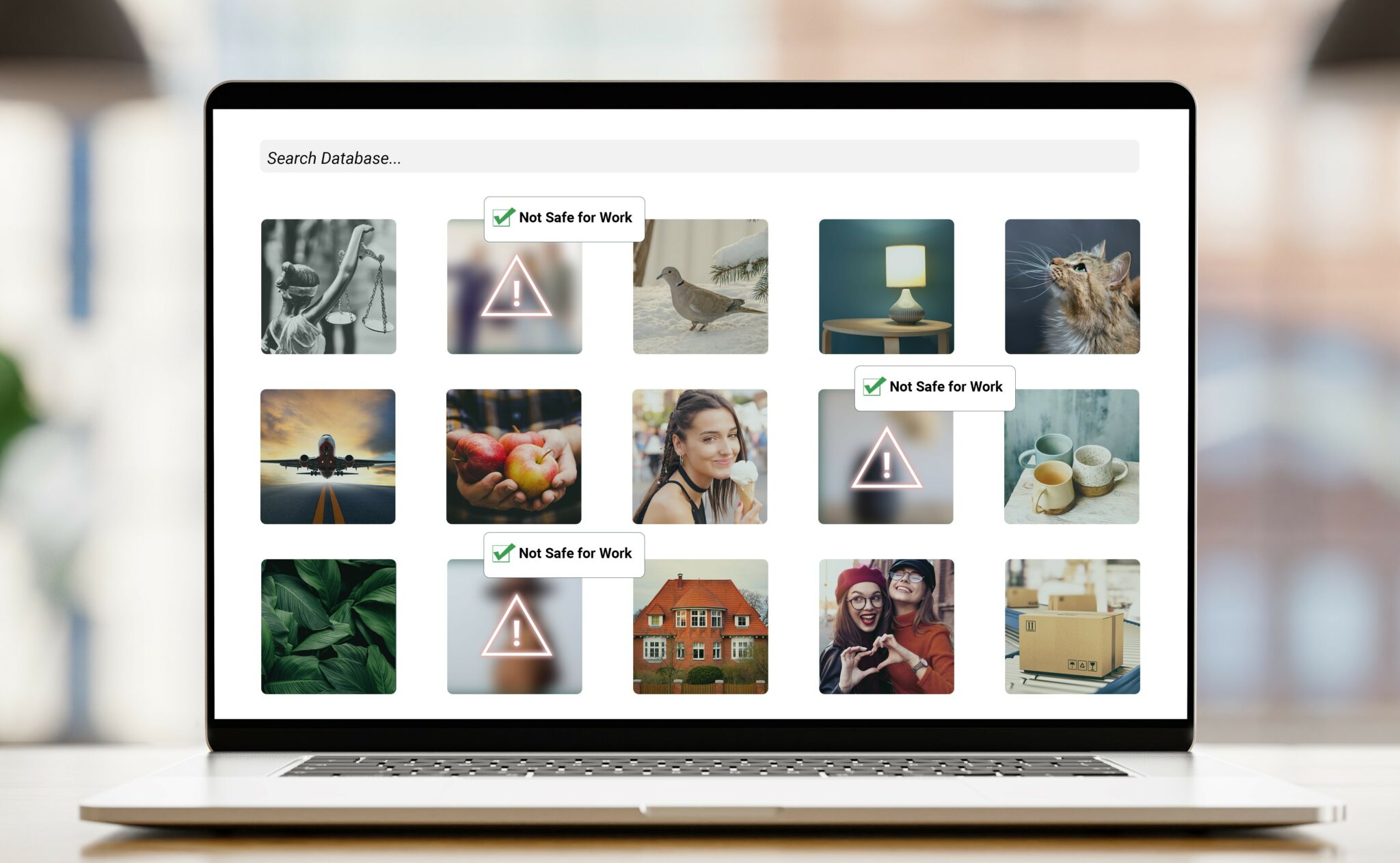

Image Moderation

Just as advancements in artificial intelligence have revolutionized text-based content moderation, developments in computer vision have transformed visual-content moderation.

Content moderation systems powered by computer vision enable platforms to sort through and remove illegal, obscene, explicit or irrelevant content on a large, automated scale without sacrificing the efficiency or accuracy of the moderation.

How does image content moderation AI work?

AI-powered content moderation tools often use pre-trained image recognition models.

Pre-trained models are AI solutions that have already been trained on a large dataset to solve a general problem, so they can be used out of the box without any custom coding or data labeling.

Pre-trained content moderation tools will have been trained on a large dataset that included harmful content.

This content would have been labeled, so that the AI model could learn to classify this type of content.

Once the model has been trained to identify this type of content, it can be used to predict whether harmful content is present in newly uploaded images.

NSFW model

One type of pre-trained model used for content moderation is an NSFW Classification model, which can detect whether an image contains nudity.

As this is a classification model, it returns a score, expressed as a percentage, that reflects how confident the model is that the image contains nudity.

This score can be compared against a threshold to decide whether an image is approved or rejected.
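As a rough sketch of how such a threshold might be applied, the function below maps a confidence percentage to a decision. The function name, the default threshold values and the middle "needs_review" band are all assumptions for illustration; the right values depend on your community guidelines and on how the model behaves on your own images.

```python
def moderate_by_score(
    nsfw_score: float,
    reject_at: float = 80.0,   # assumed threshold, tune for your platform
    review_at: float = 40.0,   # assumed threshold for optional human review
) -> str:
    """Map an NSFW confidence percentage (0-100) to a moderation decision."""
    if not 0.0 <= nsfw_score <= 100.0:
        raise ValueError("nsfw_score must be a percentage between 0 and 100")
    if nsfw_score >= reject_at:
        return "rejected"
    if nsfw_score >= review_at:
        # Uncertain cases can be routed to a human moderator.
        return "needs_review"
    return "approved"


for score in (92.5, 55.0, 3.1):
    print(score, moderate_by_score(score))
```

Setting `review_at` equal to `reject_at` removes the middle band and gives a plain approve-or-reject decision.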

Classification and object detection models

Other types of computer vision models that can be used for content moderation include classification and object detection models.

Both classification and object detection models identify the content of an image. However, an object detection model can also locate the object within the image.

A pre-trained general classification or object detection model would be able to identify general items such as a weapon. However, should the content moderation need to identify more specialist items within images, it is likely that the model would need to be custom trained.
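To make the difference concrete, here is a minimal sketch of what the two kinds of prediction might look like as data, and how detections could be checked against a list of blocked labels. The class names, field names, labels and the `min_score` default are hypothetical and do not reflect the output format of any particular platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClassificationResult:
    """One label for the whole image, with a confidence percentage."""
    label: str
    score: float


@dataclass(frozen=True)
class Detection:
    """One located object: a label, a confidence and a bounding box in pixels."""
    label: str
    score: float
    x0: int
    y0: int
    x1: int
    y1: int


def find_blocked(detections, blocked_labels, min_score=50.0):
    """Return the detections whose label is blocked and whose score is high enough."""
    return [
        d for d in detections
        if d.label in blocked_labels and d.score >= min_score
    ]


detections = [
    Detection("weapon", 91.0, 40, 60, 180, 220),
    Detection("person", 88.0, 10, 20, 300, 400),
    Detection("weapon", 30.0, 200, 210, 240, 260),  # low confidence, ignored
]

for hit in find_blocked(detections, {"weapon"}):
    print(hit.label, hit.score, (hit.x0, hit.y0, hit.x1, hit.y1))
```

A classification result says only that something is present in the image, whereas each detection also carries a bounding box, which could be used to blur or crop the offending region rather than rejecting the whole image.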

Training a content moderation model using AI

Training an AI model once seemed a daunting task, but it is now easy to train your own content moderation model using SentiSight.ai.

If your content moderation tool requires an image classification model, you can train your own by:

- Create an account on SentiSight.ai

- Create a dataset by uploading images

- Train your image classification model with a single click. More advanced users can set a range of parameters to tailor the model to their exact requirements

- Once the model has been trained, you can start making predictions with the web-based interface, via the REST API or via an on-premise solution

If your content moderation tool requires an object detection model, follow these steps to train your own:

- Create an account on SentiSight.ai

- Create your dataset by uploading images

- Label the objects of interest with a bounding box or any other tool from our user-friendly range

- Start training your model using SentiSight.ai’s simple interface – more advanced users can set a range of advanced parameters to tailor the model

- Once the model is trained, you can start using it to make predictions

Does your content moderation tool need a classification or object detection model?

Classification models assign a label to an image, whereas object detection models also locate the object within it. When choosing between a classification and an object detection model, consider the following:

Localization requirements

Do you need to understand the location of an object within an image? If so, you will need object detection.

Multiple objects

If there are multiple objects of interest within an image which need to be labeled separately, object detection is more suitable.

Overlapping objects

If objects are occluding one another, object detection models can still provide unique labels.

Simplicity and Speed

Classification models can be simpler to implement and operate.
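The considerations above can be summarized as a small decision helper. This is only a sketch of the checklist in code form; the function and parameter names are hypothetical, and a real decision would also weigh labeling effort and accuracy on your own data.

```python
def suggest_model_type(
    needs_location: bool,
    multiple_objects: bool,
    overlapping_objects: bool,
) -> str:
    """Suggest a model type from the checklist above."""
    # Any requirement to locate or separate objects points to object detection.
    if needs_location or multiple_objects or overlapping_objects:
        return "object detection"
    # Otherwise the simpler option is usually enough.
    return "classification"


print(suggest_model_type(needs_location=False, multiple_objects=False, overlapping_objects=False))
print(suggest_model_type(needs_location=True, multiple_objects=False, overlapping_objects=False))
```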

Using a SentiSight.ai pre-trained model for Content Moderation

The SentiSight.ai platform offers a range of pre-trained models that have been designed to perform general tasks to a high degree of accuracy.

Three of the pre-trained models available on SentiSight.ai are particularly versatile and well suited to content moderation: NSFW Classification, General Classification and General Object Detection.

You can try these tools for yourself free of charge on the SentiSight.ai platform. All you need to do is register for an account!

To use one of the SentiSight.ai pre-trained models on our online platform, simply:

- Click on the ‘Pre-trained models’ section in the dropdown menu

- Choose one of the models from the list

- Perform predictions using your images

Should you wish to use a pre-trained model via the REST API or offline, please follow the instructions in our user guide.