AI2’s Olmo 2 1B redefines small language model performance.

Smaller language models are carving out a niche in a field long dominated by large language models (LLMs). The nonprofit AI research institute AI2 has entered this arena with Olmo 2 1B, a compact yet powerful model that’s challenging industry giants. Released under the permissive Apache 2.0 license, this billion-parameter model delivers impressive capabilities while remaining accessible to developers with modest computing resources.

Breaking Benchmarks Despite Its Size

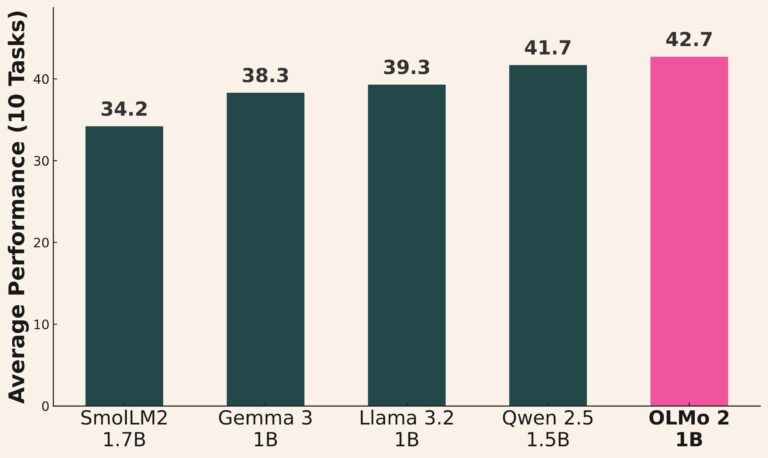

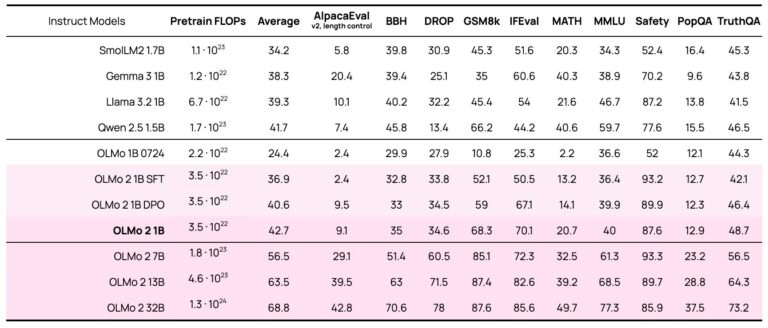

What sets Olmo 2 1B apart is its remarkable performance compared to similarly-sized models from major tech corporations. Despite its compact architecture, AI2’s new offering outperforms Google’s Gemma 3 1B, Meta’s Llama 3.2 1B, and Alibaba’s Qwen 2.5 1.5B on critical benchmarks.

The model particularly excels in arithmetic reasoning, scoring higher on the GSM8K benchmark than its competitors from tech giants. More impressively, Olmo 2 1B demonstrates superior factual accuracy on the TruthfulQA benchmark, suggesting AI2 has made significant strides in reducing hallucinations and improving knowledge reliability within constrained parameter counts.

Unlike most language models shrouded in proprietary development processes, Olmo 2 1B stands out for its transparency. AI2 has released not just the model, but also the complete training infrastructure including code and datasets (Olmo-mix-1124 and Dolmino-mix-1124) used in its development. This unprecedented level of openness allows researchers and developers to replicate the model from scratch, fostering greater understanding and innovation in the field.

Massive Training on Diverse Data

Behind Olmo 2 1B’s impressive capabilities lies an extensive training regimen. The model was trained on a massive dataset comprising 4 trillion tokens drawn from various sources:

- Publicly available text

- AI-generated content

- Manually created materials

This diverse training corpus, roughly equivalent to 3 billion pages of text, has equipped the model with broad knowledge and reasoning capabilities despite its relatively small size.
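The page-count comparison above can be sanity-checked with simple arithmetic. The sketch below is only illustrative: the 4 trillion token figure comes from the article, while the words-per-token ratio (0.75) and the words-per-page values are assumed rules of thumb, and `estimate_pages` is a hypothetical helper name.

```python
# Back-of-the-envelope conversion from training tokens to printed pages.
# Only the token count comes from the article; both ratios are assumptions.

TRAINING_TOKENS = 4_000_000_000_000  # 4 trillion tokens (from the article)
WORDS_PER_TOKEN = 0.75               # assumed rule of thumb for English text


def estimate_pages(tokens: int, words_per_page: int,
                   words_per_token: float = WORDS_PER_TOKEN) -> float:
    """Return the approximate number of pages needed to hold `tokens`."""
    words = tokens * words_per_token
    return words / words_per_page


# Try two assumed page densities to see how sensitive the estimate is.
for words_per_page in (500, 1000):
    pages = estimate_pages(TRAINING_TOKENS, words_per_page)
    print(f"{words_per_page} words/page: about {pages / 1e9:.0f} billion pages")
```

Under these assumptions, a dense page of about 1,000 words gives roughly 3 billion pages, while a more typical 500 words per page would double that estimate. Either way, the figure is an order-of-magnitude illustration rather than a precise measurement.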

Accessibility: Running Advanced AI on Everyday Hardware

The practical advantage of Olmo 2 1B’s architecture becomes clear when considering deployment scenarios. While large language models typically require specialized computing infrastructure, Olmo 2 1B can operate effectively on consumer-grade hardware. This accessibility makes advanced AI capabilities available to developers and hobbyists working with:

- Modern laptops

- Mobile devices

- Lower-end computing systems

This democratization of AI technology arrives amid a wave of small model releases from major players, including Microsoft’s Phi 4 reasoning family and Qwen’s 2.5 Omni 3B.
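To see why a model of this size fits on everyday hardware, it helps to estimate how much memory its weights need. The sketch below assumes a nominal 1 billion parameters (the article's "billion-parameter" description; the exact count may differ) and common bytes-per-parameter values for each numeric precision. It counts weights only and ignores activations, caches, and runtime overhead, so real usage will be higher. `weight_memory_gb` is a hypothetical helper name.

```python
# Rough estimate of the memory needed to hold model weights.
# Weights only: activations, caches, and runtime overhead are NOT included.

NOMINAL_PARAMS = 1_000_000_000  # assumed: "billion-parameter" taken literally

# Assumed storage cost per parameter for common numeric precisions.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    gb = weight_memory_gb(NOMINAL_PARAMS, dtype)
    print(f"{dtype}: about {gb:.1f} GB for weights")
```

At 16-bit precision this works out to about 2 GB for the weights, which is within reach of many laptops. By the same arithmetic, a 70-billion-parameter model would need about 140 GB, which is why larger models typically require specialized infrastructure.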

Important Limitations and Use Considerations

Despite its impressive capabilities, AI2 acknowledges Olmo 2 1B’s limitations. The organization has issued clear warnings about potential risks inherent to the technology. Like all AI models, Olmo 2 1B may produce:

- Factually inaccurate statements

- Potentially harmful content

- Outputs touching on sensitive topics

Given these concerns, AI2 specifically recommends against deploying the model in commercial applications. This ethical approach to model release emphasizes responsible AI development even as performance boundaries are pushed forward.

Future Implications for Small Language Models

By demonstrating that smaller models can achieve competitive performance when properly designed and trained, AI2 challenges the assumption that ever-larger models are the only path forward for AI advancement.

For developers with limited resources or specific deployment constraints, models like Olmo 2 1B represent an attractive balance between capability and practicality. As research continues to improve small-model performance, we may see new and more complex AI applications emerge on everyday devices rather than remaining confined to specialized cloud infrastructure.

Olmo 2 1B is fully available on Hugging Face under an open license, which keeps this advance accessible to the wider AI community.

Sources: TechCrunch

Written by Alius Noreika