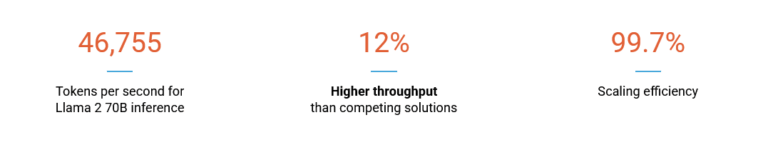

SuperNODE is a revolutionary single-node AI supercomputer that connects up to 32 GPUs with the performance characteristics of physically integrated accelerators, delivering 46,755 tokens per second for Llama 2 70B inference while maintaining 99.7% scaling efficiency. This breakthrough platform eliminates traditional multi-server complexity, reduces infrastructure costs by 30%, and accelerates AI development through seamless GPU composability powered by GigaIO’s FabreX memory fabric technology.

Artificial intelligence development faces a fundamental infrastructure bottleneck. Traditional server architectures force AI researchers and enterprises to distribute GPU resources across multiple nodes, creating network latency issues, complex software requirements, and significant performance degradation.

SuperNODE addresses these challenges by reimagining how accelerated computing systems connect and scale, offering a transformative approach to AI infrastructure that maintains simplicity while delivering unprecedented computational power.

Revolutionary Architecture Transforms AI Computing Limitations

Modern AI workloads, particularly large language models and generative AI applications, demand computational resources that exceed conventional server boundaries. Standard configurations typically limit organizations to eight GPUs per server, forcing complex multi-node deployments with inherent performance penalties.

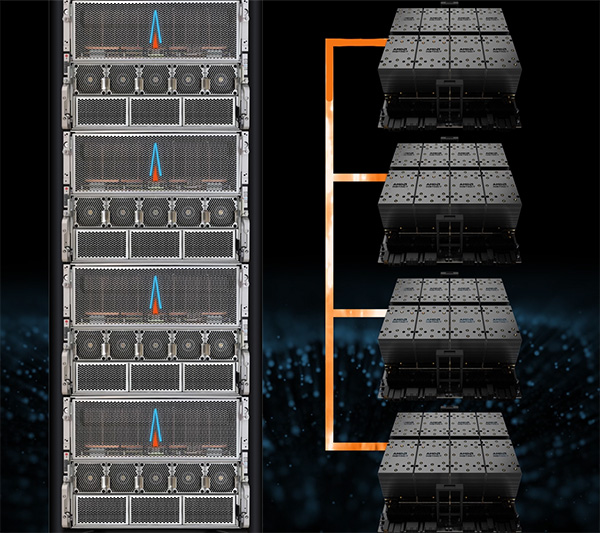

SuperNODE breaks through these constraints by creating the world’s first 32-GPU single-node configuration. This achievement stems from GigaIO’s FabreX dynamic memory fabric, which enables accelerators to communicate as if they resided within a single server chassis while maintaining native PCIe performance characteristics.

The system supports both AMD Instinct MI300X and NVIDIA accelerators, providing organizations with vendor flexibility while eliminating lock-in concerns. Each GPU maintains full bandwidth access to system memory and peer devices, creating a unified compute environment that dramatically simplifies software development and deployment processes.

FabreX Memory Fabric: The Technical Foundation

The core innovation enabling SuperNODE’s capabilities lies in FabreX’s approach to PCIe networking. Unlike traditional interconnect solutions that require protocol conversion and introduce latency overhead, FabreX creates a routable PCIe network using Non-Transparent Bridging (NTB) technology.

This architecture virtualizes hardware resources within a 64-bit address space, enabling direct memory read and write operations between any connected components. The system treats GPUs, FPGAs, storage devices, and even CPUs as memory resources, allowing seamless composition and reconfiguration without software modifications.
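The idea of a single flat address space can be illustrated with a toy model. The sketch below is not FabreX code and does not reflect GigaIO's actual address layout. It assumes each attached device receives one contiguous window in a 64-bit space, and that a fabric address resolves to a device plus an offset. The names `FabricAddressMap`, `attach`, and `resolve` are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass

ADDRESS_SPACE_SIZE = 1 << 64  # size of a 64-bit address space, in bytes
GIB = 1 << 30


@dataclass(frozen=True)
class Window:
    """A contiguous range of fabric addresses owned by one device."""
    base: int
    size: int
    device: str


class FabricAddressMap:
    """Toy model: devices are laid out back to back in one flat address space."""

    def __init__(self) -> None:
        self._windows = []   # kept sorted by base because we only append
        self._next_base = 0

    def attach(self, device: str, size: int) -> Window:
        """Reserve the next free window of `size` bytes for `device`."""
        if size <= 0:
            raise ValueError("size must be positive")
        if self._next_base + size > ADDRESS_SPACE_SIZE:
            raise ValueError("address space exhausted")
        window = Window(self._next_base, size, device)
        self._windows.append(window)
        self._next_base += size
        return window

    def resolve(self, address: int):
        """Translate a fabric address into (device name, offset in device)."""
        bases = [w.base for w in self._windows]
        idx = bisect_right(bases, address) - 1
        if idx < 0:
            raise ValueError("address is not mapped")
        window = self._windows[idx]
        if address >= window.base + window.size:
            raise ValueError("address is not mapped")
        return window.device, address - window.base


fabric = FabricAddressMap()
fabric.attach("gpu0", 16 * GIB)    # hypothetical device sizes
fabric.attach("gpu1", 16 * GIB)
fabric.attach("nvme0", 1 * GIB)

target = fabric.resolve(16 * GIB + 4096)
print(target)
```

In this model a write to address `16 GiB + 4096` lands 4096 bytes into the second GPU's window, with no copy through an intermediate buffer. Real PCIe address translation involves base address registers and bridge configuration that the sketch leaves out.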

FabreX eliminates bounce buffers and protocol conversion overhead that plague alternative solutions. The memory-semantic approach ensures that applications experience identical performance whether accessing local or remote accelerators, maintaining the programming simplicity that developers expect from single-node systems.

Unprecedented Performance Metrics Drive AI Acceleration

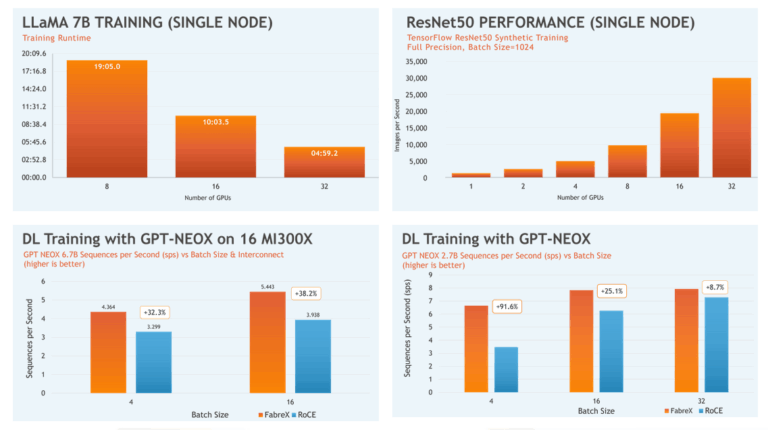

Performance benchmarks cover a range of AI workloads. The platform reaches 46,755 tokens per second for Llama 2 70B inference, which GigaIO reports as a 12% improvement over competing solutions, while maintaining energy efficiency advantages.

Scaling efficiency tests point to the same architectural advantage. HPL-MxP workloads reach 99.7% of ideal theoretical scaling, compared with roughly 50% for traditional multi-node deployments, where network communication overhead consumes much of the added capacity.
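To put the two efficiency figures side by side, the short calculation below converts them into "effective GPUs", meaning the number of ideally scaling accelerators that would deliver the same throughput. It is a back-of-the-envelope sketch that assumes both percentages apply to a 32-GPU system. The function name `effective_gpus` is introduced here for illustration.

```python
def effective_gpus(num_gpus: int, scaling_efficiency: float) -> float:
    """Return how many perfectly scaling GPUs the system is worth."""
    if not 0.0 <= scaling_efficiency <= 1.0:
        raise ValueError("scaling_efficiency must be between 0 and 1")
    return num_gpus * scaling_efficiency


single_node = effective_gpus(32, 0.997)  # 99.7% figure quoted for HPL-MxP
multi_node = effective_gpus(32, 0.50)    # roughly 50% figure for multi-node

print(f"Single node: {single_node:.1f} effective GPUs")
print(f"Multi node:  {multi_node:.1f} effective GPUs")
```

Under these assumptions, the single-node system behaves like about 31.9 ideal GPUs, while the multi-node system behaves like 16.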

Training workloads show similar results. Llama 7B training time falls from 19 minutes on a standard eight-GPU configuration to under five minutes on a 32-GPU SuperNODE deployment. ResNet-50 performance scales linearly as additional GPUs join the single-node configuration, which keeps performance predictable and simplifies capacity planning.
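The Llama 7B figures can be turned into a speedup and a scaling efficiency with simple arithmetic. The sketch below assumes the two runs used the same model, data, and training settings, which the source does not state. Because the 32-GPU time is given only as "under five minutes", the result is a lower bound.

```python
def speedup_and_efficiency(base_minutes: float, base_gpus: int,
                           new_minutes: float, new_gpus: int):
    """Return (speedup, scaling efficiency) for a larger configuration."""
    speedup = base_minutes / new_minutes   # how much faster the run finishes
    ideal_speedup = new_gpus / base_gpus   # perfect linear scaling
    return speedup, speedup / ideal_speedup


speedup, efficiency = speedup_and_efficiency(19.0, 8, 5.0, 32)

print(f"Speedup: at least {speedup:.1f}x on {32 // 8}x the GPUs")
print(f"Scaling efficiency: at least {efficiency:.0%}")
```

Going from 8 to 32 GPUs is a fourfold increase, so a 3.8x speedup corresponds to at least 95% scaling efficiency.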

Operational Flexibility Through Beast, Freestyle, and Swarm Modes

SuperNODE’s composability extends beyond pure performance to operational flexibility. The platform operates in three distinct modes, each optimized for different use cases and organizational requirements.

Beast Mode concentrates all 32 accelerators on a single workload, delivering maximum computational power for large-scale training runs or complex inference tasks. This configuration provides the ultimate performance capability for organizations tackling the most demanding AI challenges.

Freestyle Mode distributes accelerators across multiple users or applications, enabling efficient resource utilization when full system power isn’t required. Organizations can dynamically allocate GPU resources based on current demands, maximizing return on infrastructure investments.

Swarm Mode extends beyond single-node boundaries, connecting multiple SuperNODE systems for workloads requiring hundreds of accelerators. This configuration maintains FabreX’s low-latency characteristics while scaling to enterprise-class computational requirements.
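The difference between Beast Mode and Freestyle Mode can be pictured as two ways of partitioning the same pool of 32 accelerators. The sketch below is a conceptual model only: actual composition is handled by GigaIO's fabric tooling, which is not shown, and the `allocate` function and the job names are hypothetical. Swarm Mode, which spans several systems, is not modeled.

```python
from typing import Dict, List

TOTAL_GPUS = 32  # accelerators in one SuperNODE, per the article


def allocate(requests: Dict[str, int],
             total: int = TOTAL_GPUS) -> Dict[str, List[int]]:
    """Hand out contiguous GPU index ranges in the order requests are listed."""
    if any(count <= 0 for count in requests.values()):
        raise ValueError("each request must ask for at least one GPU")
    if sum(requests.values()) > total:
        raise ValueError("requests exceed the available GPUs")

    allocation: Dict[str, List[int]] = {}
    next_free = 0
    for name, count in requests.items():
        allocation[name] = list(range(next_free, next_free + count))
        next_free += count
    return allocation


# Beast Mode: one workload takes every accelerator.
beast = allocate({"llm-training": 32})

# Freestyle Mode: the same pool is split across several users.
freestyle = allocate({"team-a": 16, "team-b": 8, "team-c": 8})

for name, gpus in freestyle.items():
    print(f"{name}: GPUs {gpus[0]}-{gpus[-1]}")
```

A first-come, contiguous assignment keeps the sketch simple. A real scheduler would also need to handle releases, fragmentation, and fabric topology.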

Energy Efficiency and Total Cost Optimization

SuperNODE delivers efficiency improvements across several operational dimensions. GigaIO cites a power draw roughly 7 kilowatts lower per 32-GPU deployment than equivalent multi-server configurations. The saving comes from eliminating redundant server components, networking equipment, and cooling infrastructure.

Rack space requirements decrease by 30% compared to traditional deployments, allowing organizations to achieve higher computational density within existing data center footprints. The simplified architecture reduces management overhead, cooling complexity, and network infrastructure requirements.

Total cost of ownership benefits extend beyond hardware savings. The single-node architecture eliminates expensive InfiniBand networking requirements, reduces software licensing complexity, and simplifies system administration. Organizations can deploy advanced AI capabilities without the operational complexity typically associated with large-scale compute clusters.

Software Integration Maintains Development Simplicity

One of SuperNODE’s most significant advantages lies in its software transparency. Applications developed for single-node environments run without modification on 32-GPU configurations, eliminating the code changes typically required for multi-node scaling.

Popular AI frameworks including PyTorch and TensorFlow operate natively on SuperNODE systems. Developers can focus on model innovation rather than distributed computing complexity, accelerating time-to-market for AI applications and research projects.
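From the framework's point of view, a single-node system simply exposes more devices to one process. The sketch below shows how a PyTorch script might list the accelerators it can see. It uses the standard `torch.cuda` calls, which ROCm builds of PyTorch also use for AMD GPUs. The helper name `list_visible_accelerators` is introduced here, and the function returns an empty list on a machine without PyTorch or without GPUs.

```python
def list_visible_accelerators():
    """Return the names of all accelerators PyTorch can see in this process."""
    try:
        import torch  # optional dependency; absent on some machines
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    return [torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())]


names = list_visible_accelerators()
print(f"{len(names)} accelerator(s) visible")
for index, name in enumerate(names):
    print(f"  device {index}: {name}")
```

How many devices this reports on a given SuperNODE depends on how the system has been composed. The article's claim is that all attached GPUs appear to software as local devices.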

The platform includes comprehensive software bundles featuring NVIDIA AI Enterprise Essentials, Base Command Manager for infrastructure orchestration, and FabreX Fabric Manager for system configuration. All components arrive pre-configured and validated, enabling immediate deployment upon delivery.

Vendor Flexibility and Future-Proof Architecture

SuperNODE’s open architecture philosophy prevents vendor lock-in while ensuring compatibility with evolving accelerator technologies. The system supports current AMD Instinct MI300X and NVIDIA GPU families while maintaining upgradeability for future accelerator generations.

Component-level upgrade capabilities allow organizations to refresh specific subsystems independently. This modularity protects infrastructure investments while enabling adoption of next-generation technologies as they become available.

The FabreX foundation scales beyond single SuperNODE deployments, supporting rack-scale and multi-rack configurations for organizations requiring massive computational resources. This scalability ensures that initial SuperNODE investments remain valuable as computational requirements grow.

Real-World Impact on AI Development Workflows

Industry professionals highlight SuperNODE’s practical benefits for AI development. “This is an incredible platform for HPC and ML/AI. It is really wild to see 32 GPUs appear on ROCm SMI!” notes Nick Malaya, AMD Fellow for HPC, emphasizing the system’s seamless integration with existing tools.

Greg Diamos, Co-founder and CTO of Lamini, emphasizes operational benefits: “The SuperNODE means less time messing with infrastructure and faster time to running and optimizing LLMs.” This perspective underscores how SuperNODE enables teams to focus on AI innovation rather than infrastructure management.

Research organizations and enterprises adopting SuperNODE report significant reductions in model development cycles, improved resource utilization rates, and simplified operational procedures. The platform’s ease of deployment and management enables smaller teams to tackle previously inaccessible AI challenges.

Market Recognition and Industry Adoption

SuperNODE has garnered significant attention across technology media and industry publications. Coverage spans technical publications including HPCwire, The Next Platform, and InsideHPC, as well as consumer technology outlets recognizing the platform’s innovative approach to compute architecture.

Notable applications include complex computational fluid dynamics simulations. One deployment produced a 40-billion-cell simulation of the Concorde supersonic aircraft's landing sequence. The 33-hour computation ran across 32 AMD Instinct MI210 GPUs and demonstrates SuperNODE's capability for demanding scientific and engineering workloads.

TensorWave’s deployment featuring AMD MI300X accelerators represents one of the largest SuperNODE orders to date, indicating growing enterprise adoption for production AI workloads. Such deployments validate SuperNODE’s readiness for mission-critical applications beyond research and development environments.

Strategic Implications for AI Infrastructure

SuperNODE represents a fundamental shift in AI infrastructure philosophy, moving away from complex distributed systems toward simplified, high-performance single-node architectures. This approach aligns with the industry trend toward larger, more capable accelerators that benefit from tight coupling and high-bandwidth communication.

Organizations evaluating AI infrastructure strategies should consider SuperNODE’s unique position in addressing both current performance requirements and future scalability needs. The platform’s combination of immediate deployment capability, operational simplicity, and architectural flexibility provides a compelling alternative to traditional scale-out approaches.

As AI workloads continue growing in complexity and computational requirements, infrastructure solutions that minimize overhead while maximizing accelerator utilization become increasingly valuable. SuperNODE’s proven performance characteristics and simplified management model position it as a strategic asset for organizations serious about AI development and deployment.

Sources: GigaIO

Written by Alius Noreika