Developing, training, and deploying AI models requires access to powerful GPU resources, and tools that make this process simpler and more cost-effective are a real advantage. RunPod is a serious contender in this market segment: a cloud platform designed specifically for AI workloads that lets small teams and enterprises deploy full-stack AI applications without managing complex infrastructure.

What is RunPod?

RunPod is a globally distributed GPU cloud platform built specifically for AI and machine learning workloads. Founded by Zhen Lu and Pardeep Singh, RunPod provides a unified cloud ecosystem for AI training, fine-tuning, and inference. The platform secured a $20M seed investment co-led by Intel Capital and Dell Technologies Capital in 2024, highlighting the industry’s confidence in its mission to make AI cloud computing both accessible and affordable without compromising on features or user experience.

Key Features That Set RunPod Apart

Lightning-Fast Deployment

One of the most frustrating aspects of cloud computing is waiting for resources to spin up. Traditional platforms can take upwards of 10 minutes to boot pods, creating significant delays in development workflows. RunPod states that it has cut pod cold-boot time down to milliseconds, so developers can start building within seconds of deploying a pod. For serverless deployments, RunPod’s FlashBoot technology enables sub-250ms cold start times, reducing the usual wait for GPUs to warm up when usage is unpredictable.

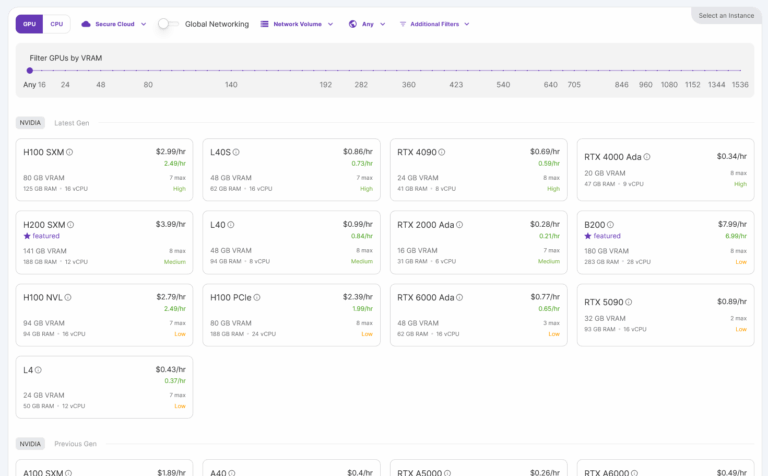

Powerful GPU Selection

RunPod offers an impressive array of GPU options to suit every workload and budget:

- High-End Enterprise GPUs: NVIDIA H100 PCIe (80GB VRAM), A100 PCIe and SXM (80GB VRAM), and AMD MI300X (192GB VRAM) for the most demanding AI training and inference tasks

- Mid-Range Professional GPUs: NVIDIA A40, L40, L40S, and RTX A6000 (all with 48GB VRAM)

- Cost-Effective Options: RTX A5000, RTX 4090, RTX 3090, and RTX 4000 Ada for more budget-conscious projects

This diverse selection ensures that users can find the right balance between performance and cost for their specific AI applications.

Flexible Deployment Options

RunPod provides multiple ways to deploy AI workloads:

- GPU Pods: Container-based GPU instances that spin up in seconds, ideal for development and training

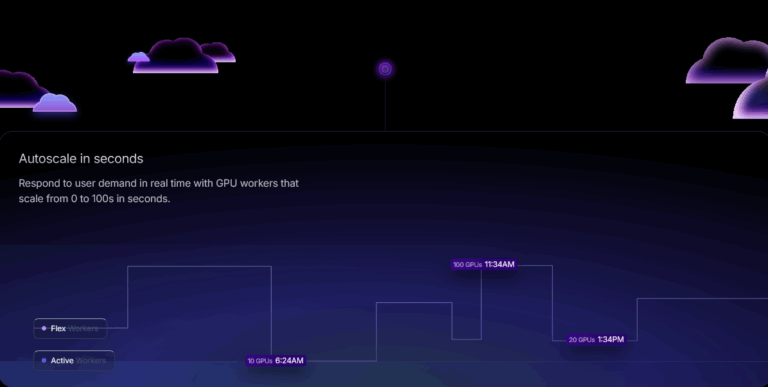

- Serverless Endpoints: Auto-scaling serverless deployments with job queueing for production inference workloads

- vLLM Endpoints: Lightning-fast OpenAI-compatible endpoints for large language models

- Instant Clusters: Multi-GPU clusters that scale from 2 to 50+ GPUs with high-speed interconnects

- Bare Metal: Direct access to physical hardware for maximum control and performance
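
Because the vLLM endpoints are described as OpenAI-compatible, a client mainly needs a base URL and an API key. The following is a minimal sketch using only the Python standard library. The base URL, API key, and model name are placeholders (assumptions, not real RunPod values), and the `/chat/completions` path and `Bearer` header follow the general OpenAI convention rather than anything stated in this article.

```python
import json
import urllib.request

# Placeholder values: replace them with the details of your own endpoint.
BASE_URL = "https://example.invalid/v1"  # hypothetical, not a real RunPod URL
API_KEY = "YOUR_API_KEY"                 # hypothetical
MODEL = "your-model-name"                # hypothetical


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       api_key: str = API_KEY,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes it easy to inspect the payload before pointing it at a live endpoint.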

Comprehensive Template Library

RunPod offers over 50 ready-to-use templates that allow users to get started instantly with frameworks like PyTorch, TensorFlow, and other preconfigured environments tailored for machine learning workflows. Additionally, users can create and configure their own custom templates to fit their specific deployment needs, with support for both public and private image repositories.

Robust Storage Solutions

The platform provides network storage volumes backed by NVMe SSDs with up to 100Gbps network throughput. RunPod supports storage sizes of 100TB+ and can accommodate even larger requirements (1PB+) for enterprise customers. This high-performance storage ensures that data-intensive AI workloads run smoothly and efficiently.

Advanced Monitoring and Analytics

RunPod delivers comprehensive monitoring capabilities that are essential for managing AI deployments:

- Usage Analytics: Real-time analytics for endpoints with metrics on completed and failed requests

- Execution Time Analytics: Detailed metrics on execution time, delay time, cold start time, GPU utilization, and more

- Real-Time Logs: Descriptive, real-time logs showing exactly what’s happening across active and flex GPU workers

RunPod Cloud Options

RunPod offers two distinct cloud environments to match different user needs:

Secure Cloud

The Secure Cloud provides high-reliability infrastructure in enterprise-grade data centers (Tier 3 or Tier 4 standards) with enhanced security measures. This option is ideal for businesses with sensitive workloads or compliance requirements. RunPod has obtained SOC2 Type 1 Certification as of February 2025, and their data center partners maintain leading compliance standards including HIPAA, SOC2, and ISO 27001.

Community Cloud

The Community Cloud leverages a peer-to-peer GPU computing model, offering potentially lower prices but with less guaranteed uptime. This option is perfect for cost-sensitive users, researchers, or projects where occasional interruptions are acceptable.

Pricing Criteria

RunPod employs a transparent pay-as-you-go pricing model with no hidden costs:

- GPU Instances: Charged hourly based on GPU type, ranging from $0.16/hr for an RTX A5000 on Community Cloud to $2.49/hr for an AMD MI300X on Secure Cloud

- Serverless Usage: Charged per request, allowing users to scale from zero to hundreds of instances as needed

- No Extra Costs: Unlike many cloud providers, RunPod doesn’t charge for data transfer (ingress/egress)

A minimum $10 account load is required to get started, but this covers multiple hours of usage even on high-end GPUs.
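
To make "multiple hours" concrete, here is a small sketch that divides the $10 minimum load by the two hourly rates quoted above. The function name is illustrative, and the calculation assumes a single pod with no storage or other charges.

```python
def hours_of_runtime(balance_usd: float, hourly_rate_usd: float) -> float:
    """Return how many hours a balance lasts at a fixed hourly rate."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly rate must be positive")
    return balance_usd / hourly_rate_usd


# Rates taken from the pricing list above.
for name, rate in [("AMD MI300X (Secure Cloud)", 2.49),
                   ("RTX A5000 (Community Cloud)", 0.16)]:
    print(f"{name}: {hours_of_runtime(10.0, rate):.1f} h")
```

Under these assumptions, the minimum load buys roughly 4 hours on the most expensive GPU listed and about 62 hours on the cheapest.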

Ideal Use Cases

RunPod serves a diverse range of users and applications:

AI Model Training

RunPod is well suited to machine learning training tasks that can take up to 7 days. Users can train on available NVIDIA H100s and A100s or reserve AMD MI300Xs and AMD MI250s for extended periods. The platform’s high-performance GPUs and flexible deployment options make it a good fit for training large language models, computer vision systems, and other compute-intensive AI applications.

AI Model Fine-Tuning

The Fine-tuner feature simplifies the process of customizing existing open-source models with proprietary data. Instead of training models from scratch, users can leverage pre-trained models and adapt them to specific use cases, saving significant time and computational resources.

AI Inference at Scale

RunPod’s serverless infrastructure handles millions of inference requests daily, allowing users to scale their machine learning inference while keeping costs low. With autoscaling capabilities, workloads can expand from zero to hundreds of instances based on demand, with the platform handling all the operational aspects from deployment to scaling.

Full-Stack AI Application Development

By providing both development environments and production infrastructure, RunPod enables teams to build and deploy complete AI applications without switching between different platforms. This unified approach streamlines the development process and reduces operational complexity.

Who Uses RunPod?

RunPod serves various segments of the AI ecosystem:

- Startups: Cost-effective GPU access enables early-stage companies to develop AI solutions without massive infrastructure investments

- Academic Institutions: Researchers can access high-performance computing resources for experimental work and publications

- Enterprises: Larger organizations benefit from RunPod’s scalable infrastructure for production AI deployments

Getting Started with RunPod

Starting with RunPod is straightforward:

- Create a RunPod account (minimum $10 initial load)

- Select your desired GPU type based on your workload requirements

- Choose from available templates or upload your custom container image

- Deploy and connect to your pod or configure your serverless endpoint

- Start building, training, or deploying your AI applications

Developer Tools and Integration

RunPod provides several tools to enhance developer productivity:

- CLI Tool: Automatically hot-reload local changes while developing and deploy on Serverless when finished

- API Access: Powerful REST API for integrating RunPod’s capabilities into existing workflows and applications

- Docker Integration: Seamless support for containerized applications, ensuring portability and consistent environments

Security and Compliance

Security is a priority for RunPod, particularly in their Secure Cloud offering. The platform employs multi-tenant isolation to ensure workload separation, and the Secure Cloud provides enhanced security features for sensitive applications. RunPod’s SOC2 Type 1 Certification (as of February 2025) and their data center partners’ compliance with standards like HIPAA, SOC2, and ISO 27001 make it suitable for enterprises with strict security requirements.

Customer Support

RunPod offers multiple support channels:

- Community Discord for peer assistance

- Support chat for direct communication

- Email support

- Comprehensive documentation for self-service

RunPod GPU Pricing Comparison and Analysis

When deploying AI workloads to the cloud, understanding the cost structure is crucial for budgeting and resource planning. RunPod offers a transparent pricing model with various GPU options across two distinct cloud environments. This comprehensive analysis examines RunPod’s pricing structure and provides insights to help you optimize your AI infrastructure costs.

RunPod offers two distinct cloud environments, each with different pricing structures and reliability guarantees:

Secure Cloud

The Secure Cloud provides high-reliability infrastructure in enterprise-grade data centers (Tier 3 or Tier 4 standards) with enhanced security measures. This option is designed for:

- Production workloads requiring consistent uptime

- Business-critical applications

- Projects with compliance requirements (SOC2, HIPAA, ISO 27001)

- Long-running training jobs that would be costly to restart

Community Cloud

The Community Cloud leverages a peer-to-peer GPU computing model, offering lower prices but with less guaranteed uptime. This option is ideal for:

- Researchers and students with limited budgets

- Startups in early development stages

- Projects where occasional interruptions are acceptable

- Testing and development environments

| GPU Model | VRAM | RAM | vCPUs | Secure Cloud Price ($/hr) | Community Cloud Price ($/hr) | Savings on Community |
|---|---|---|---|---|---|---|
| AMD MI300X | 192GB | 283GB | 24 | $2.49 | Not Available | N/A |
| NVIDIA H100 PCIe | 80GB | 188GB | 16 | $2.39 | $1.99 | 16.7% |
| NVIDIA A100 PCIe | 80GB | 117GB | 8 | $1.64 | $1.19 | 27.4% |
| NVIDIA A100 SXM | 80GB | 125GB | 16 | $1.89 | Not Available | N/A |
| NVIDIA L40S | 48GB | 71GB | 12 | $0.86 | $0.79 | 8.1% |
| NVIDIA L40 | 48GB | 94GB | 8 | $0.99 | $0.69 | 30.3% |
| NVIDIA A40 | 48GB | 48GB | 9 | $0.40 | Not Available | N/A |
| NVIDIA RTX A6000 | 48GB | 50GB | 8 | $0.49 | $0.33 | 32.7% |
| NVIDIA RTX 4090 | 24GB | 29GB | 6 | $0.69 | $0.34 | 50.7% |
| NVIDIA RTX A5000 | 24GB | 25GB | 3 | $0.26 | $0.16 | 38.5% |
| NVIDIA RTX 3090 | 24GB | 24GB | 4 | $0.43 | $0.22 | 48.8% |
| NVIDIA RTX 4000 Ada | 20GB | 47GB | 9 | $0.34 | $0.20 | 41.2% |
| NVIDIA RTX 3080 | 10GB | 16GB | 3 | Not Listed | $0.17 | N/A |
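
The "Savings on Community" column is the Community Cloud discount relative to the Secure Cloud price. A short sketch of that calculation, using a few rows from the table (the function and variable names are illustrative):

```python
def community_savings_pct(secure_usd_hr: float, community_usd_hr: float) -> float:
    """Percentage saved by choosing Community Cloud over Secure Cloud."""
    return round((secure_usd_hr - community_usd_hr) / secure_usd_hr * 100, 1)


# (Secure Cloud $/hr, Community Cloud $/hr), copied from the table above.
PRICES = {
    "NVIDIA H100 PCIe": (2.39, 1.99),
    "NVIDIA A100 PCIe": (1.64, 1.19),
    "NVIDIA RTX 4090": (0.69, 0.34),
    "NVIDIA RTX A5000": (0.26, 0.16),
}

savings = {gpu: community_savings_pct(secure, community)
           for gpu, (secure, community) in PRICES.items()}

for gpu, pct in savings.items():
    print(f"{gpu}: {pct}% cheaper on Community Cloud")
```

Rerunning this with current prices is a quick way to check whether the discount still justifies the lower uptime guarantee for a given GPU.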

Monthly Cost Estimates (730 hours)

| GPU Model | Secure Cloud Monthly Cost | Community Cloud Monthly Cost | Monthly Savings |
|---|---|---|---|
| AMD MI300X | $1,817.70 | N/A | N/A |
| NVIDIA H100 PCIe | $1,744.70 | $1,452.70 | $292.00 |
| NVIDIA A100 PCIe | $1,197.20 | $868.70 | $328.50 |
| NVIDIA RTX A6000 | $357.70 | $240.90 | $116.80 |
| NVIDIA RTX 4090 | $503.70 | $248.20 | $255.50 |
| NVIDIA RTX 3090 | $313.90 | $160.60 | $153.30 |
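
The 730-hour figure is the average number of hours in a month (24 × 365 / 12), so these estimates assume a pod that never stops. The sketch below reproduces one row and contrasts it with a part-time schedule. The 176-hour schedule (8 hours a day for 22 days) is an illustrative assumption, not a figure from RunPod.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # 730.0, the always-on assumption used above


def monthly_cost(hourly_rate_usd: float, hours: float = HOURS_PER_MONTH) -> float:
    """Estimated monthly cost in USD for a pod billed at a fixed hourly rate."""
    return round(hourly_rate_usd * hours, 2)


h100_secure = monthly_cost(2.39)            # always-on, Secure Cloud
h100_community = monthly_cost(1.99)         # always-on, Community Cloud
h100_part_time = monthly_cost(1.99, 176)    # assumed 8 h/day x 22 days

print(f"H100 Secure, 730 h:    ${h100_secure:,.2f}")
print(f"H100 Community, 730 h: ${h100_community:,.2f}")
print(f"H100 Community, 176 h: ${h100_part_time:,.2f}")
```

Since billing is pay-as-you-go, stopping pods outside working hours changes the monthly total far more than the choice between the two clouds does.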

Pricing Comparison with Major Cloud Providers (Estimated)

One of the most compelling aspects of RunPod’s pricing is how it compares to major cloud providers like AWS, GCP, and Azure. RunPod offers significant cost savings, with prices for high-end GPUs like the A100 typically being 65-75% lower than equivalent options from major cloud providers.

For instance, an NVIDIA A100 80GB that costs around $1.19/hour on RunPod’s Community Cloud might cost $3.67-$4.10/hour on major cloud platforms. This represents potential savings of thousands of dollars per month for teams running continuous workloads.

| GPU Model | RunPod Community ($/hr) | AWS ($/hr) | GCP ($/hr) | Azure ($/hr) |
|---|---|---|---|---|
| NVIDIA A100 80GB | $1.19 | $4.10 | $3.67 | $3.67 |
| NVIDIA A10/L40 | $0.69 | $1.23 | $1.20 | $1.29 |
| Consumer GPUs (RTX series) | $0.16-$0.34 | Not Offered | Not Offered | Not Offered |

Note: Prices for major cloud providers are estimates and may vary based on region, commitment terms, and special offers. The table presents approximate on-demand pricing for comparable GPU instances as of May 2025.
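
As a rough check of the "65-75% lower" and "thousands of dollars per month" claims, the sketch below applies the estimated A100 80GB rates from the table to an always-on month of 730 hours. The provider figures are the article's estimates, so the results inherit their uncertainty.

```python
RUNPOD_A100_USD_HR = 1.19
ESTIMATED_A100_USD_HR = {"AWS": 4.10, "GCP": 3.67, "Azure": 3.67}
HOURS_PER_MONTH = 730


def percent_lower(reference_usd_hr: float, price_usd_hr: float) -> float:
    """How much lower `price_usd_hr` is than `reference_usd_hr`, in percent."""
    return (reference_usd_hr - price_usd_hr) / reference_usd_hr * 100


comparison = {
    provider: (
        round(percent_lower(rate, RUNPOD_A100_USD_HR), 1),
        round((rate - RUNPOD_A100_USD_HR) * HOURS_PER_MONTH, 2),
    )
    for provider, rate in ESTIMATED_A100_USD_HR.items()
}

for provider, (pct, monthly_gap) in comparison.items():
    print(f"vs {provider}: {pct}% lower, ${monthly_gap:,.2f} less per month")
```

With these inputs the gap per always-on A100 is about $1,800 to $2,100 a month, so the "thousands" figure holds once a team runs more than one GPU continuously.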

Cost-Effectiveness Analysis

Community vs. Secure Cloud Value Proposition

The Community Cloud consistently offers lower prices across almost all GPU models, with savings ranging from 8% to over 50% compared to Secure Cloud options. The most dramatic savings are seen with consumer-grade GPUs like the RTX 4090, where Community Cloud prices are nearly half of Secure Cloud prices.

Unique Access to Consumer GPUs

RunPod offers consumer and workstation RTX-series GPUs, which are generally not available from major cloud providers. This gives budget-conscious users access to capable GPUs at very low entry prices (as low as $0.16/hour for an RTX A5000).

Additional Cost Advantages

- No data transfer charges: Unlike most major cloud providers, RunPod doesn’t charge for data ingress or egress, potentially saving significant amounts for data-intensive workloads.

- Low entry barrier: The minimum account load is only $10, making it accessible for experimentation.

- Pay-as-you-go model: Users only pay for the time their pods are running, with no minimum commitments.

Price-Performance Analysis

When comparing GPUs within RunPod’s offerings, the price-performance ratio reveals interesting insights:

Best Value Options

- RTX A5000 ($0.16/hr on Community Cloud): With 24GB VRAM, it offers excellent value for many deep learning tasks that don’t require the latest architecture.

- RTX 4090 ($0.34/hr on Community Cloud): Provides impressive compute performance for generative AI tasks at a fraction of the cost of enterprise GPUs.

- RTX 4000 Ada ($0.20/hr on Community Cloud): Good balance of modern architecture and reasonable VRAM (20GB) at a budget-friendly price.

Production Workhorses

- A100 PCIe ($1.19/hr on Community Cloud): Remains a balanced choice for production workloads, with its 80GB VRAM providing ample memory for large models.

- H100 PCIe ($1.99/hr on Community Cloud): While considerably more expensive, delivers significant performance improvements for certain workloads that can justify the premium.

Specialized Options

- AMD MI300X ($2.49/hr on Secure Cloud only): Offers massive 192GB VRAM and substantial system memory (283GB), making it ideal for extremely large models or memory-intensive applications.

- L40/L40S ($0.69-$0.79/hr on Community Cloud): Good options for workloads that benefit from latest-generation tensor cores but don’t require H100/A100 level performance.
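
One simple way to apply this comparison is to pick the cheapest GPU that meets a model's VRAM requirement. The sketch below does that over a subset of the price table. The `Gpu` type and `cheapest_gpu` helper are illustrative names, and the selection deliberately ignores compute throughput, system RAM, and availability.

```python
from typing import NamedTuple, Optional


class Gpu(NamedTuple):
    name: str
    vram_gb: int
    community_usd_hr: Optional[float]  # None when not offered on Community Cloud
    secure_usd_hr: Optional[float]     # None when not offered on Secure Cloud


# Subset of the price table in this article.
CATALOG = [
    Gpu("AMD MI300X", 192, None, 2.49),
    Gpu("NVIDIA H100 PCIe", 80, 1.99, 2.39),
    Gpu("NVIDIA A100 PCIe", 80, 1.19, 1.64),
    Gpu("NVIDIA L40", 48, 0.69, 0.99),
    Gpu("NVIDIA A40", 48, None, 0.40),
    Gpu("NVIDIA RTX A6000", 48, 0.33, 0.49),
    Gpu("NVIDIA RTX 4090", 24, 0.34, 0.69),
    Gpu("NVIDIA RTX A5000", 24, 0.16, 0.26),
]


def cheapest_gpu(min_vram_gb: int, cloud: str = "community") -> Optional[Gpu]:
    """Return the lowest-priced GPU with enough VRAM in the chosen cloud."""
    if cloud not in ("community", "secure"):
        raise ValueError("cloud must be 'community' or 'secure'")

    def rate(gpu: Gpu) -> Optional[float]:
        return gpu.community_usd_hr if cloud == "community" else gpu.secure_usd_hr

    candidates = [g for g in CATALOG
                  if g.vram_gb >= min_vram_gb and rate(g) is not None]
    return min(candidates, key=rate, default=None)


print(cheapest_gpu(24))            # smallest budget option with 24GB
print(cheapest_gpu(48, "secure"))  # 48GB class on Secure Cloud
print(cheapest_gpu(100))           # nothing above 80GB on Community Cloud
```

A VRAM floor is only a first filter; for throughput-bound training, the faster but pricier GPUs listed under Production Workhorses can still finish a job for less total cost.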

Strategic Usage to Optimize Costs

Based on RunPod’s pricing structure, users can implement several strategies to optimize costs:

- Hybrid Approach: Use Community Cloud for development and testing, then switch to Secure Cloud for production deployments.

- Right-sizing: Choose the appropriate GPU for specific workloads rather than defaulting to the highest-end option. For instance, many fine-tuning tasks work perfectly well on RTX 4090s at a fraction of A100 costs.

- Resource Consideration: Factor in the RAM and vCPU allocations alongside GPU specifications. Some models (like the L40) offer significantly more system resources which might reduce the need for multi-GPU setups.

- Serverless for Intermittent Workloads: For inference or occasional processing, leverage RunPod’s serverless options to avoid paying for idle time.

- Usage Monitoring: Keep track of pod usage and costs, as RunPod automatically stops pods when funds are insufficient for 10 more minutes of runtime. While volume data is preserved, container disk data is lost when pods stop due to insufficient funds.

- Spot vs. On-Demand: Consider using Spot instances for interruptible workloads, which can save up to 50% compared to On-Demand prices.
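
For the usage-monitoring point, it can help to estimate how long a pod will run before the automatic stop. The sketch below assumes the simplest reading of the rule: a single pod billed at a fixed hourly rate, stopped once the balance covers fewer than 10 more minutes. Storage charges and other running pods are ignored, and the function name is illustrative.

```python
AUTO_STOP_BUFFER_MIN = 10  # from the rule described above


def minutes_until_auto_stop(balance_usd: float, hourly_rate_usd: float,
                            buffer_min: float = AUTO_STOP_BUFFER_MIN) -> float:
    """Estimate runtime in minutes before the pod is stopped for low funds."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly rate must be positive")
    affordable_minutes = balance_usd / hourly_rate_usd * 60
    # The pod stops once fewer than `buffer_min` minutes remain affordable.
    return max(0.0, affordable_minutes - buffer_min)


# $10 balance on an H100 PCIe at the Community Cloud rate of $1.99/hr.
remaining = minutes_until_auto_stop(10.0, 1.99)
print(f"About {remaining / 60:.1f} hours until auto-stop")
```

Because container disk data is lost on such a stop, a job should checkpoint to a volume well before this estimate runs out.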

Conclusion

RunPod has established itself as a compelling cloud platform for AI workloads, offering a strong balance of performance, flexibility, and cost-effectiveness. By focusing specifically on the needs of AI developers and researchers, RunPod delivers a streamlined experience that removes much of the operational overhead associated with managing GPU infrastructure.

Whether you’re a startup building your first AI prototype, a researcher training complex models, or an enterprise deploying production AI systems, RunPod provides the tools and resources to accelerate your workflow and reduce costs. With its globally distributed infrastructure, diverse GPU options, and advanced serverless capabilities, RunPod is well-positioned to support the growing demand for AI computing resources in an increasingly AI-driven world.

For AI developers and teams looking to focus more on building intelligent applications and less on managing infrastructure, RunPod offers a very attractive solution that combines power, flexibility, and affordability in a single, cohesive platform.


Sources: RunPod.io

Written by Alius Noreika