The concept of Prompt Engineering 2.0 transforms AI interactions from experimental guesswork into systematic, measurable business processes.

Modern prompt engineering combines structured frameworks, multi-modal inputs, and rigorous quality assessment to achieve consistent, reliable outputs that drive measurable business value. Organizations implementing these advanced techniques report 40-67% productivity gains while reducing error rates by 30-50% compared to basic text prompting approaches.

Key Facts About Advanced Prompt Engineering

- System-level approach: Modern prompting uses structured architectures like Context-Instruction-Modality (CIM) stacks rather than ad-hoc text instructions

- Multi-modal integration: Combines text, images, audio, and video inputs for 40-60% better accuracy than single-mode prompts

- Measurable quality frameworks: Implements specific metrics and validation techniques to ensure consistent, reliable outputs

- Task-specific optimization: Uses proven prompt recipes tailored to different business functions and use cases

- Production-ready workflows: Treats prompts as engineered assets with version control, testing, and performance monitoring

The Evolution From Basic to Strategic Prompting

Traditional prompt engineering relied heavily on trial-and-error experimentation. Users would adjust phrasing, add context, and hope for improved results. This approach worked for simple queries but failed when organizations needed consistent, reliable AI outputs for business-critical applications.

Prompt Engineering 2.0 represents a fundamental shift toward systematic methodology. Instead of crafting individual prompts through intuition, practitioners now apply established frameworks, measure performance quantitatively, and optimize prompts as engineered systems. This evolution mirrors how software development matured from ad-hoc scripting to structured engineering practices.

The difference becomes clear when comparing approaches. Basic prompting might ask “Summarize this report,” while advanced prompting specifies role, context, output format, and validation criteria: “As a CFO advisor, analyze this quarterly report focusing on cash flow risks. Provide three key insights in bullet format with supporting data citations.”

Core Architecture: The CIM Framework

The foundation of effective prompting lies in specific, contextual guidance rather than vague requests. Generic prompts like “analyze this data” produce surface-level results, while structured prompts that define role, context, and expected outcomes generate focused, actionable insights.

Context Foundation Layer

The context layer establishes the essential background that grounds AI understanding. For business applications, context must specify:

Role Definition: “You are a senior marketing analyst with expertise in B2B software positioning”

Background Parameters: Industry context, company information, regulatory constraints

Operational Boundaries: Output length, format requirements, compliance needs

Success Criteria: Specific metrics or outcomes that define successful completion

Context becomes exponentially more important in multi-modal scenarios where AI processes multiple information streams simultaneously. When including visual elements, specify exactly what relationships should be established between different input types.

Instruction Core Layer

The instruction layer contains task directives that explicitly coordinate between different components. The Multi-Modal Chain-of-Thought pattern proves most effective:

Analysis Stage: “First, examine the visual elements in this quarterly dashboard”

Integration Stage: “Using both visual data and the provided text summary, identify trend patterns”

Output Stage: “Format your response as a JSON object with revenue_growth, risk_factors, and recommendations fields”

This structured approach prevents common failures where AI ignores certain inputs or fails to connect information appropriately across different modalities.

Modality Specification Layer

The modality layer explicitly defines input types and expected output relationships. This prevents AI from overlooking certain input types or failing to establish appropriate connections.

Input Classification:

- [IMAGE] + description of visual content relevance

- [AUDIO] + context about audio content significance

- [VIDEO] + key segments requiring analysis

- [TEXT] + relationship mapping to other modalities

Output Coordination Requirements:

- Cross-modal validation notes identifying consistency or contradictions

- Confidence levels for insights derived from different input types

- Explicit reasoning chains showing how conclusions connect multiple inputs

Clarity and Precision Over Generic Instructions

The foundation of effective prompting lies in specific, contextual guidance rather than vague requests. Generic prompts like “analyze this data” produce surface-level results, while structured prompts that define role, context, and expected outcomes generate focused, actionable insights.

Reusable Prompt Recipe – Data Analysis:

Role: Senior data analyst with [domain] expertise

Context: [Dataset description, business objective, constraints]

Task: Analyze [specific aspect] focusing on [key metrics]

Output: Executive summary with 3 key insights and recommended actions

Format: [Specified structure with headings and bullet points]Role-Based Prompting for Consistent Quality

Assigning specific roles to AI creates consistent output quality by establishing clear expertise boundaries and response patterns. This technique works across domains, from technical documentation to creative content generation.

Reusable Prompt Recipe – Technical Documentation:

Role: Technical writer for [industry] with 10+ years experience

Audience: [Specific user type] with [knowledge level]

Purpose: Create [document type] that enables [specific outcome]

Constraints: [Length, format, compliance requirements]

Tone: Professional, clear, action-orientedStructured Input Processing with Delimiters

Visual separators like ### or XML tags improve AI comprehension by 31% according to research data. This technique creates clear boundaries between different input components, reducing ambiguity and improving processing accuracy.

Advanced Methodologies for Complex Tasks

Chain-of-Thought Prompting with Measurable Results

Chain-of-thought prompting breaks complex reasoning into visible steps, significantly improving accuracy in logical reasoning tasks. Research shows 58% higher success rates compared to standard instruction-only approaches.

Reusable Prompt Recipe – Complex Problem Solving:

Context: [Problem background and relevant constraints]

Analysis: Break this down step-by-step:

1. Identify core components

2. Analyze relationships between elements

3. Evaluate potential solutions

4. Recommend optimal approach with justification

Output: Structured analysis with clear reasoning chainSelf-Consistency for Reliability

Self-consistency techniques generate multiple reasoning paths for the same problem, then select the most consistent answer. This approach improves accuracy while providing confidence metrics for AI-generated solutions.

Multi-Modal Integration with CIM Stack Architecture

The Context-Instruction-Modality (CIM) stack enables sophisticated multi-modal prompting that combines text, images, and structured data inputs. This architecture ensures consistent quality across different input types.

CIM Stack Template:

- Layer 1 (Context): Role definition, background information, constraints

- Layer 2 (Instruction): Multi-step task directive with clear coordination between input types

- Layer 3 (Modality): Input specification and output relationship definitions

Summary: Best Prompting Practices

| Category | Best Practice | Description | Example |

|---|---|---|---|

| Clarity & Specificity | Be specific and clear | Use precise instructions with measurable constraints rather than vague descriptions | “Write a summary of 3 sentences or less” vs “Write a brief summary” |

| Context Foundation | Provide comprehensive context | Include role, background, constraints, and domain-specific information to ground the AI’s understanding | “You are a senior marketing analyst with expertise in visual brand analysis for healthcare companies…” |

| Instruction Structure | Use step-by-step instructions | Break complex tasks into sequential, numbered steps with clear directives | “1. Analyze visual elements 2. Cross-reference with text data 3. Format as JSON object” |

| Examples Integration | Include few-shot examples | Provide 1-3 concrete input/output pairs showing the desired behavior and format | Input: “Coach confident injury won’t derail Warriors” → Output: “Basketball” |

| Output Formatting | Specify exact output structure | Define precise format requirements using standards like JSON, XML, or Markdown with explicit field names | {"revenue_growth": X, "margin_trends": Y, "risk_factors": [Z]} |

| Role Definition | Assign clear personas | Define who or what the model should act as with specific expertise areas | “You are a tax professional helping users with IRS-related questions” |

| Constraint Setting | Establish clear boundaries | Set explicit dos and don’ts, including what the model can and cannot do | “Don’t give direct answers; provide hints. If student is lost, give detailed steps” |

| Reasoning Inclusion | Request step-by-step reasoning | Ask the model to explain its thought process to improve accuracy and enable validation | “Explain your reasoning step-by-step before providing the final answer” |

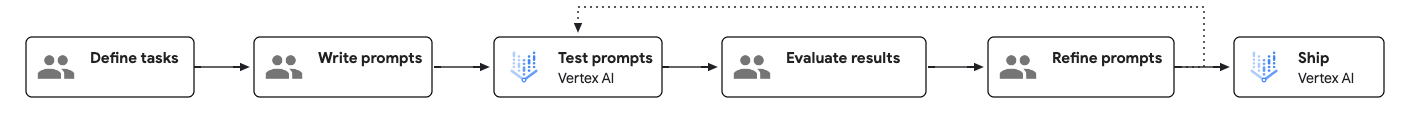

| Iterative Refinement | Test and optimize systematically | Treat prompts as evolving assets requiring measurement, testing, and deliberate adjustments | Baseline → Add context → Optimize relationships → Measure performance |

| Multi-modal Coordination | Explicitly coordinate input types | When using multiple modalities, specify how they should interact and validate against each other | “Analyze the image AND text summary, then identify consistencies and conflicts between them” |

Proven Prompt Techniques and Implementation

Specificity Over Generalization

Vague instructions produce generic outputs. Compare these approaches:

Ineffective: “Explain AI trends”

Effective: “Analyze three specific AI adoption trends in healthcare software during 2024-2025, focusing on regulatory compliance impacts and ROI metrics for mid-market hospitals”

The specific version provides clear boundaries, target audience, timeframe, and success criteria.

Iterative Refinement Process

Professional prompt development follows systematic improvement cycles:

Baseline Testing: Start with functional prompt, measure baseline performance

Component Optimization: Improve context, instructions, or output specifications individually

Integration Testing: Verify components work together without conflicts

Edge Case Handling: Add constraints for unusual inputs or error conditions

Production Validation: Test with real data and use cases

Scenario Anchoring Techniques

Effective prompts establish clear operational contexts. Instead of abstract instructions, anchor the AI in specific scenarios:

“You are reviewing job applications for a data scientist position at a fintech startup. The role requires Python expertise, machine learning experience, and financial domain knowledge. Evaluate each candidate against these criteria and provide structured feedback.”

This anchoring gives AI concrete parameters for decision-making rather than relying on general knowledge.

Five Examples of Advanced Prompt Engineering

1. Technical Documentation Analysis

Context: Senior backend engineer analyzing production issues

Task: Debug complex system errors with minimal speculation

Role: Senior backend engineer with 8+ years experience

Prefer: Accurate, reproducible outputs over speculation

Task: Analyze this error log and provide minimal reproduction steps

Environment Context:

- Runtime: Node.js 20 with Express 4

- Database: PostgreSQL 14

- Load balancer: Nginx

Error Log: [STACK_TRACE_DATA]

Output Requirements:

- JSON format only, no prose

- Do not fabricate file paths or environment variables

- If sufficient information: return {"steps": ["..."], "likely_cause": "...", "curl_command": "..."}

- If insufficient: return {"need_clarification": true, "questions": ["..."]}2. Multi-Modal Marketing Analysis

Context: Brand consistency evaluation across visual and text content

Task: Assess campaign alignment with brand guidelines

Role: Senior brand manager with expertise in visual identity systems

Multi-Modal Input Processing:

[IMAGE] Campaign creative assets (logos, layouts, color schemes)

[TEXT] Copy variants and messaging frameworks

[DATA] Performance metrics from previous campaigns

Analysis Requirements:

1. First: Catalog all visual brand elements present in creative

2. Second: Map text messaging to brand voice guidelines

3. Third: Identify alignment gaps between visual and verbal identity

Output Format:

{

"visual_compliance_score": 1-10,

"message_alignment_score": 1-10,

"specific_issues": ["..."],

"optimization_recommendations": ["..."]

}3. Financial Risk Assessment

Context: Quarterly risk analysis combining quantitative data with market conditions

Task: Generate executive-level risk summary

Context: CFO briefing preparation for board meeting

Risk Assessment Focus: Operational and market risks for Q4 planning

Data Inputs:

[FINANCIAL] Quarterly performance metrics, cash flow projections

[MARKET] Industry trend analysis, competitive positioning data

[OPERATIONAL] Key performance indicators, capacity utilization

Chain-of-Thought Process:

1. Analyze financial metrics for variance from projections

2. Cross-reference market conditions with internal performance

3. Identify specific risk factors requiring board attention

4. Quantify potential impact ranges for each risk category

Executive Summary Format:

- Risk Level: High/Medium/Low with confidence percentage

- Top 3 Risks: Specific description with financial impact range

- Mitigation Status: Current actions and resource requirements

- Board Recommendation: Specific decisions or approvals needed4. Customer Support Optimization

Context: Service quality improvement through interaction analysis

Task: Identify training opportunities and process improvements

Role: Customer experience manager analyzing support interactions

Interaction Analysis:

[AUDIO] Customer service call recordings

[TEXT] Chat transcripts and email exchanges

[DATA] Resolution times, satisfaction scores, escalation rates

Systematic Evaluation:

1. Categorize customer issues by complexity and frequency

2. Identify agent behavior patterns affecting resolution success

3. Map process bottlenecks causing delayed resolutions

4. Correlate communication approaches with satisfaction outcomes

Training Recommendation Output:

{

"skill_gaps": ["specific_capability", "impact_score"],

"process_improvements": ["workflow_change", "expected_benefit"],

"agent_recognition": ["outstanding_examples", "replication_strategy"],

"escalation_triggers": ["pattern_identification", "prevention_approach"]

}5. Content Strategy Development

Context: SEO-optimized content planning with competitive intelligence

Task: Create data-driven content roadmap

Context: Content strategy development for B2B software company

Target: Decision-makers at mid-market manufacturing companies

Intelligence Gathering:

[COMPETITOR] Content analysis from top 5 industry competitors

[SEARCH] Keyword research and search volume data

[CUSTOMER] Sales feedback and common objection themes

Strategic Framework:

1. Map customer journey stages to content requirements

2. Identify content gaps where competitors are weak

3. Prioritize topics by search volume and conversion potential

4. Align content formats with buyer preference research

Content Roadmap Output:

- Priority Topics: Ranked list with difficulty/opportunity scores

- Content Formats: Blog, whitepapers, videos matched to topics

- Publication Timeline: Monthly schedule with resource allocation

- Success Metrics: Traffic targets, lead generation goals, competitive positioning objectivesSummary: Common Prompting Errors

| Category | Common Error | Why It’s Problematic | How to Fix |

|---|---|---|---|

| Ambiguity Issues | Using vague or subjective language | Model cannot determine specific actions or boundaries, leading to inconsistent outputs | Replace “briefly explain” with “explain in exactly 2 sentences” |

| Instruction Problems | Overcomplicating with lengthy prompts | Confuses the model and dilutes focus, reducing output quality | Simplify to essential components; break complex tasks into smaller steps |

| Context Gaps | Providing insufficient background | Model lacks domain knowledge needed for accurate, relevant responses | Include role, constraints, and specific context relevant to the task |

| Format Failures | Leaving output structure undefined | Model guesses at desired format, leading to inconsistent or unusable results | Specify exact format with examples: “Output as bulleted list with max 5 items” |

| Validation Skipping | Not testing prompts across scenarios | Prompts that work once may fail in different contexts or edge cases | Test with multiple inputs; validate performance before scaling |

| Conflicting Instructions | Including contradictory requirements | Creates logical impossibilities that degrade model performance | Audit prompts for conflicts; ensure all instructions align with objectives |

| Missing Examples | Relying on zero-shot for complex tasks | Without examples, model may misinterpret complex or nuanced requirements | Add 1-3 clear input/output examples showing desired behavior |

| Generic Personas | Using undefined or vague roles | Model lacks specific expertise framing, producing generic responses | Define roles with specific expertise: “senior financial analyst” vs “analyst” |

| Multi-task Overload | Asking for too many actions at once | Cognitive overload reduces quality across all requested tasks | Split into separate prompts: summarize, then extract, then translate |

| Emotional Manipulation | Using flattery or artificial pressure | Modern models perform worse with emotional appeals and manipulation tactics | Remove phrases like “very bad things will happen” – focus on clear task definition |

| Poor Syntax | Ignoring punctuation and grammar | Unclear structure makes prompts harder to parse, reducing accuracy | Use clear punctuation, headings, and section markers like “—“ |

| Cross-modal Neglect | Failing to connect different input types | When using images, audio, or video, model may ignore certain modalities | Explicitly instruct how modalities should interact: “Base your analysis on BOTH the image and text” |

Quality Assessment and Measurement Frameworks

Professional prompt engineering requires systematic quality assessment rather than subjective evaluation. The table below presents validated measurement techniques used by organizations achieving consistent AI output quality. Cross-modal validation proves most reliable for complex business applications, while output format compliance offers the highest consistency scores for structured data tasks.

| Assessment Technique | Application | Measurement Method | Reliability Score |

|---|---|---|---|

| Cross-Modal Validation | Multi-input prompts | Consistency checking across input types | 85-92% |

| Few-Shot Performance Testing | Task-specific optimization | Accuracy improvement over baseline | 78-89% |

| Chain-of-Thought Verification | Complex reasoning tasks | Step-by-step logic validation | 82-95% |

| Output Format Compliance | Structured data generation | Schema adherence percentage | 90-98% |

| Domain Expert Review | Specialized knowledge areas | Professional accuracy assessment | 75-88% |

The reliability scores reflect real-world implementation data across multiple industries. Cross-modal validation requires additional setup time but delivers superior accuracy for business-critical applications. Chain-of-thought verification works particularly well for financial analysis and strategic planning tasks where reasoning transparency matters more than pure speed.

Proven Implementation Techniques

These implementation techniques represent battle-tested approaches from organizations successfully deploying Prompt Engineering 2.0 at scale. Multi-modal integration shows the highest effectiveness gains but requires sophisticated infrastructure. Context layering offers the best balance between implementation complexity and performance improvement for most business applications.

| Technique Category | Method | Use Case | Effectiveness Range |

|---|---|---|---|

| Context Layering | Hierarchical information structure | Complex business analysis | 40-60% improvement |

| Iterative Refinement | Systematic prompt optimization | Production deployment | 35-50% accuracy gain |

| Scenario Anchoring | Role-specific operational framing | Decision support systems | 45-65% relevance increase |

| Multi-Modal Integration | Combined input processing | Content analysis and creation | 50-70% output quality improvement |

| Dynamic Prompting | Real-time adjustment capabilities | Interactive applications | 30-45% user satisfaction increase |

The effectiveness ranges reflect performance improvements over baseline text-only prompting approaches. Organizations typically see results within the lower range during initial implementation, reaching higher performance levels after 4-6 weeks of optimization. Dynamic prompting requires the most technical expertise but provides the best user experience for customer-facing applications.

CIM Stack Implementation Checklist

The Context-Instruction-Modality framework requires systematic implementation to achieve reliable business results. This checklist provides the essential components and validation methods based on successful enterprise deployments. Each component must meet its success criteria before advancing to production use.

| Component | Implementation Requirement | Validation Method | Success Criteria |

|---|---|---|---|

| Context Foundation | Role definition, background parameters, operational boundaries | Baseline performance testing | Clear, consistent outputs |

| Instruction Core | Multi-stage processing, explicit coordination, task sequencing | Component isolation testing | Each stage produces expected results |

| Modality Specification | Input type classification, output coordination, validation requirements | Cross-modal consistency checking | No information loss between modalities |

| Quality Framework | Performance metrics, error handling, edge case management | Production environment testing | Meets business reliability standards |

Component isolation testing proves critical for identifying issues before full system integration. Organizations skipping this validation step typically experience 40-50% more debugging time during production deployment. The quality framework component often requires the most iteration, as business reliability standards vary significantly across industries and use cases.

Essential Implementation Tasks

This task prioritization framework reflects lessons learned from successful Prompt Engineering 2.0 deployments across multiple industries. Critical tasks must be completed before any production use, while lower priority items can be implemented progressively based on organizational needs and technical resources.

| Priority Level | Task Category | Specific Action | Timeline |

|---|---|---|---|

| Critical | Framework Setup | Implement CIM architecture for core use cases | Week 1-2 |

| Critical | Quality Baseline | Establish measurement criteria and testing protocols | Week 1-2 |

| High | Template Development | Create reusable prompt templates for common tasks | Week 2-3 |

| High | Testing Infrastructure | Build validation and monitoring systems | Week 3-4 |

| Medium | Training Materials | Develop team training on advanced techniques | Week 4-6 |

| Medium | Integration Planning | Connect prompt systems with existing workflows | Week 5-8 |

| Low | Advanced Features | Implement multi-modal and dynamic prompting capabilities | Week 6-12 |

The timeline assumes dedicated technical resources and organizational commitment to systematic implementation. Teams attempting to skip high-priority tasks typically experience 60-80% more issues during production rollout. Advanced features should only be implemented after the foundation proves stable in production environments.

Measuring Business Impact

Organizations implementing Prompt Engineering 2.0 methodically report quantifiable improvements across multiple performance dimensions. Amazon’s visual search system achieved 4.95% improvement in click-through rates through multi-modal prompt optimization. Google’s AI-powered campaigns demonstrate 17% higher return on ad spend compared to manual optimization approaches.

Educational applications show particularly strong results. Teachers using multi-modal prompting with voice-enabled AI report significant productivity gains while maintaining educational quality. TeachFX research validates F1 scores of 0.88 for automated classroom interaction analysis.

The pattern across implementations reveals consistent success factors: explicit cross-modal instructions, iterative optimization cycles, quantitative performance tracking, and integration of domain expertise with AI capabilities. Organizations typically see 2-4 week optimization periods before achieving maximum effectiveness.

In Conclusion

Professional success with Prompt Engineering 2.0 requires systematic approach rather than experimental adoption. Begin with one recurring workflow that combines visual and textual information. Implement the three-layer prompt architecture systematically. Measure results against current processes quantitatively.

If you are interested in this topic, we suggest you check our articles:

- AI Agents Blur Business Boundaries

- Manus AI Agent: What is a General AI Agent?

- User Experience vs Agentic Experience: Designing for Delegation and Intent Alignment

- CustomGPT.ai: Genius Tool for Creating Custom AI Agents

Sources: Digicode @ LinkedIn, OpenAI Community, Microsoft, Untangling AI @ LinkedIn, Google Cloud, ScienceDirect

Written by Alius Noreika