Key Facts

- Microsoft’s microfluidic cooling removes heat up to three times more effectively than conventional cold plate technology

- Microchannels etched directly into the back of silicon chips let coolant flow where heat is generated, reducing the maximum temperature rise of the silicon inside a GPU by 65%

- AI chips produce far more heat than previous generations; without advanced cooling, reliance on cold plates could limit datacenter progress within as little as five years

- Traditional cold plates remain separated from heat sources by insulating layers that trap warmth and limit cooling efficiency

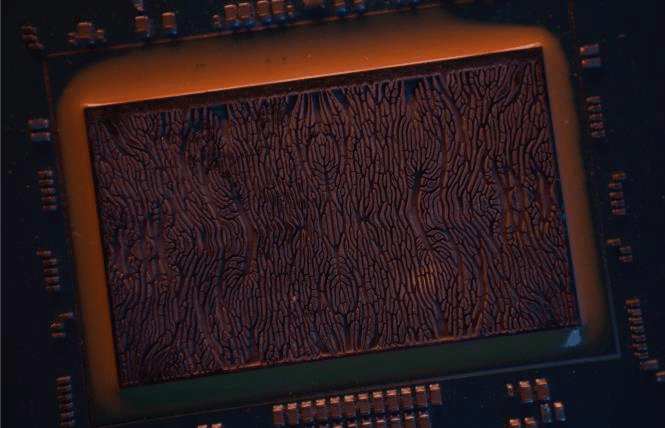

- AI algorithms optimize bio-inspired channel designs resembling leaf veins to direct coolant precisely toward chip hot spots

- Technology enables overclocking during demand spikes without damaging silicon, improving server performance and reducing infrastructure costs

Why AI Silicon Gets Dangerously Hot

Modern artificial intelligence processors generate substantially more thermal energy than earlier chip generations. Nearly all the electrical power a chip draws ends up as heat, as current flowing through resistive circuitry converts energy into warmth. Current AI workloads demand unprecedented processing density, cramming billions of transistors onto fingernail-sized surfaces, and those transistors can switch on and off billions of times per second.
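
The link between switching speed and heat can be made concrete with the textbook first-order model for CMOS switching power, P = α·C·V²·f (activity factor, switched capacitance, supply voltage, clock frequency). The sketch below applies that formula to invented chip-level values; they are not figures for any Microsoft or GPU part, and leakage power is ignored.

```python
def dynamic_power_watts(activity: float, capacitance_f: float,
                        voltage_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power: P = alpha * C * V^2 * f (leakage ignored)."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


# Hypothetical chip-level values, chosen only to show the scaling.
ALPHA = 0.2          # average fraction of the capacitance switched each cycle
C_TOTAL_F = 1.0e-6   # effective switched capacitance in farads

baseline_w = dynamic_power_watts(ALPHA, C_TOTAL_F, voltage_v=0.8, freq_hz=2.0e9)
boosted_w = dynamic_power_watts(ALPHA, C_TOTAL_F, voltage_v=0.9, freq_hz=2.4e9)

print(f"baseline: {baseline_w:.0f} W")
print(f"boosted:  {boosted_w:.0f} W ({boosted_w / baseline_w:.2f}x)")
```

With these numbers, a 20% clock increase paired with a 0.1 V voltage bump raises switching power by roughly half, which is why pushing chips harder quickly becomes a cooling problem.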

Microsoft has demonstrated microfluidic cooling technology that addresses this escalating thermal challenge by etching microscopic channels directly into the back of the silicon chip. Coolant flows through these hair-width grooves, absorbing heat close to where transistors generate it. Lab testing shows this approach removes heat up to three times more effectively than cold plates and reduces the maximum temperature rise of the silicon inside a GPU by 65 percent. The result arrives as traditional cooling methods approach physical limits that could constrain AI advancement within as little as five years.

The Fundamental Problem with Current Cooling Methods

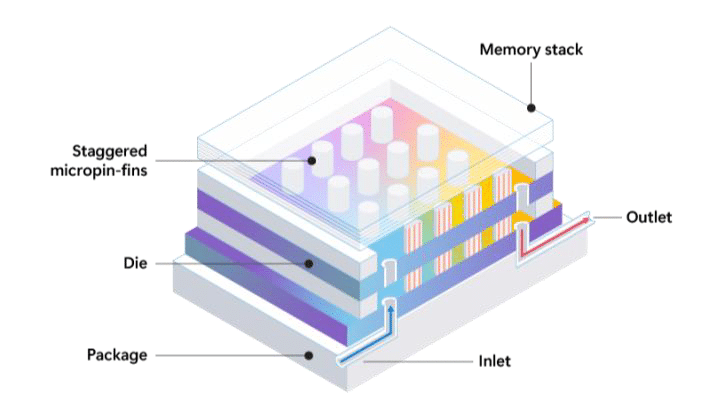

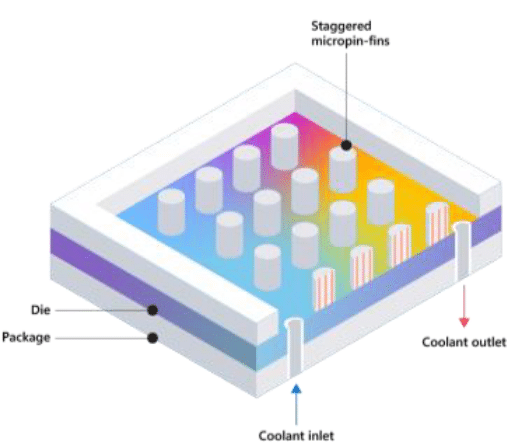

Render of microscopic pins around which liquid coolant flows to absorb and dissipate heat. Image credit: Microsoft

Cold plates represent today’s advanced datacenter cooling standard. These metal plates sit atop processors, circulating chilled liquid through internal channels to draw heat away. The configuration suffers from a critical flaw: multiple insulating layers separate coolant from active silicon.

Chip manufacturers package processors with thermal spreaders, protective coatings, and interface materials designed to distribute heat and shield delicate components. These same materials function like blankets, trapping warmth inside and preventing cold plates from accessing heat sources directly. As AI chips grow more powerful, this thermal barrier becomes increasingly problematic.
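
One way to see why these "blanket" layers matter is to treat each layer between the transistors and the coolant as a thermal resistance in series, R = t / (k·A), plus a convective resistance 1 / (h·A) into the liquid, so the silicon sits ΔT = P·ΣR above the coolant. The sketch below is a one-dimensional toy model: the layer list, thicknesses, conductivities, die area, power, and the higher heat-transfer coefficient assumed for etched channels (more wetted surface) are all hypothetical, and lateral heat spreading is ignored.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    thickness_m: float
    conductivity_w_per_m_k: float  # thermal conductivity, W/(m*K)

    def resistance_k_per_w(self, area_m2: float) -> float:
        """One-dimensional conduction resistance R = t / (k * A)."""
        return self.thickness_m / (self.conductivity_w_per_m_k * area_m2)


def temperature_rise_k(power_w: float, layers: list[Layer], area_m2: float,
                       h_w_per_m2_k: float) -> float:
    """Silicon temperature rise above the coolant: P * (sum of layer R + 1/(h*A))."""
    r_total = sum(layer.resistance_k_per_w(area_m2) for layer in layers)
    r_total += 1.0 / (h_w_per_m2_k * area_m2)  # convection from the last surface into the coolant
    return power_w * r_total


AREA_M2 = 8e-4   # 8 cm^2 die (hypothetical)
POWER_W = 700.0  # hypothetical accelerator power

# Hypothetical package stack between transistors and a cold plate's coolant.
cold_plate_stack = [
    Layer("silicon die", 500e-6, 150.0),
    Layer("thermal interface material", 50e-6, 5.0),
    Layer("copper heat spreader", 2e-3, 400.0),
    Layer("thermal interface material", 50e-6, 5.0),
    Layer("cold plate base", 2e-3, 400.0),
]
# With etched channels, only a thin layer of silicon separates coolant and transistors.
microfluidic_stack = [Layer("silicon below channels", 200e-6, 150.0)]

cold_plate_rise = temperature_rise_k(POWER_W, cold_plate_stack, AREA_M2, h_w_per_m2_k=2.0e4)
microfluidic_rise = temperature_rise_k(POWER_W, microfluidic_stack, AREA_M2, h_w_per_m2_k=5.0e4)
print(f"cold plate:   {cold_plate_rise:.1f} K above coolant")
print(f"microfluidic: {microfluidic_rise:.1f} K above coolant")
```

In this model the interface and spreader layers add resistance that microfluidic cooling removes from the heat path entirely. Real packages spread heat laterally, so the absolute numbers are illustrative only.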

“If you’re still relying heavily on traditional cold plate technology, you’re stuck,” said Sashi Majety, senior technical program manager for Cloud Operations and Innovation at Microsoft. Future processor generations are expected to produce heat loads that exceed what cooling systems separated from the silicon can handle, creating a hard ceiling on computational advancement.

How Microfluidic Systems Work Inside Silicon

Microfluidics brings coolant inside the chip itself rather than attempting to cool it from outside. Engineers etch channels directly into the back of the silicon during manufacturing, creating grooves similar in width to a human hair. Coolant flows through this network, making direct contact with silicon mere micrometers from the active transistors.

This proximity eliminates the insulating layers that hamper conventional cooling. Heat passes directly from the silicon into the flowing liquid instead of conducting through multiple material boundaries. The approach handles heat flux exceeding 1 kilowatt per square centimeter, two to three times what standard cold plates manage.
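
To put 1 kW/cm² in perspective, the energy balance Q = ṁ·c_p·ΔT gives the coolant flow needed to carry a heat load away for a given coolant temperature rise. The sketch assumes a water-like coolant and a hypothetical 1 cm² hot region; the source does not state Microsoft's coolant formulation or flow rates.

```python
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of liquid water near room temperature
WATER_DENSITY_KG_PER_L = 0.997


def required_flow_l_per_min(heat_w: float, coolant_rise_k: float,
                            cp: float = WATER_CP_J_PER_KG_K,
                            density: float = WATER_DENSITY_KG_PER_L) -> float:
    """Volumetric flow that absorbs heat_w while warming by coolant_rise_k (Q = m_dot * cp * dT)."""
    mass_flow_kg_s = heat_w / (cp * coolant_rise_k)
    return mass_flow_kg_s / density * 60.0


flux_w_per_cm2 = 1000.0   # 1 kW/cm^2, the figure quoted above
hot_area_cm2 = 1.0        # hypothetical hot-region area
heat_w = flux_w_per_cm2 * hot_area_cm2

for rise_k in (5.0, 10.0, 20.0):
    print(f"coolant rise {rise_k:4.1f} K -> {required_flow_l_per_min(heat_w, rise_k):.2f} L/min")
```

Halving the allowed coolant temperature rise doubles the required flow, which is one reason flow rate, pumping power, and channel design have to be balanced together.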

Microsoft partnered with Swiss startup Corintis to develop channel patterns inspired by biological systems. The final design mimics vein structures in leaves and butterfly wings, which evolution optimized for efficient fluid distribution. AI algorithms analyzed chip heat signatures and refined channel geometry to direct coolant precisely toward hot spots rather than using simple parallel grooves.
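
Why steer coolant toward hot spots instead of spreading it evenly? In a toy model where each region's coolant warms by ΔT_i = q_i / (ṁ_i·c_p), splitting a fixed total flow in proportion to each region's heat equalizes the rise, while uniform parallel grooves let the hottest region dominate. The heat map, flow values, and proportional-split rule below are hypothetical illustrations; this is not the AI-driven optimization Microsoft and Corintis used.

```python
CP_J_PER_KG_K = 4186.0  # water-like coolant (assumption)


def coolant_rise_k(heat_w: list[float], flow_kg_s: list[float]) -> list[float]:
    """Per-region coolant temperature rise for a given heat load and flow split."""
    return [q / (m * CP_J_PER_KG_K) for q, m in zip(heat_w, flow_kg_s)]


def uniform_split(n: int, total_kg_s: float) -> list[float]:
    """Equal flow in every channel, like simple parallel grooves."""
    return [total_kg_s / n] * n


def heat_weighted_split(heat_w: list[float], total_kg_s: float) -> list[float]:
    """Give each region flow in proportion to the heat it produces."""
    total_heat = sum(heat_w)
    return [total_kg_s * q / total_heat for q in heat_w]


# Hypothetical heat map in watts: one hot spot among cooler regions.
heat = [40.0, 40.0, 220.0, 60.0, 40.0]
TOTAL_FLOW_KG_S = 0.01  # shared by all channels (hypothetical)

uniform = coolant_rise_k(heat, uniform_split(len(heat), TOTAL_FLOW_KG_S))
targeted = coolant_rise_k(heat, heat_weighted_split(heat, TOTAL_FLOW_KG_S))
print(f"uniform  max rise: {max(uniform):.2f} K")
print(f"targeted max rise: {max(targeted):.2f} K")
```

Coolant temperature rise is only a proxy for silicon temperature, but the same logic explains why channel layouts shaped around a chip's heat signature can lower peak temperatures without adding total flow.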

Engineering Challenges and Solutions

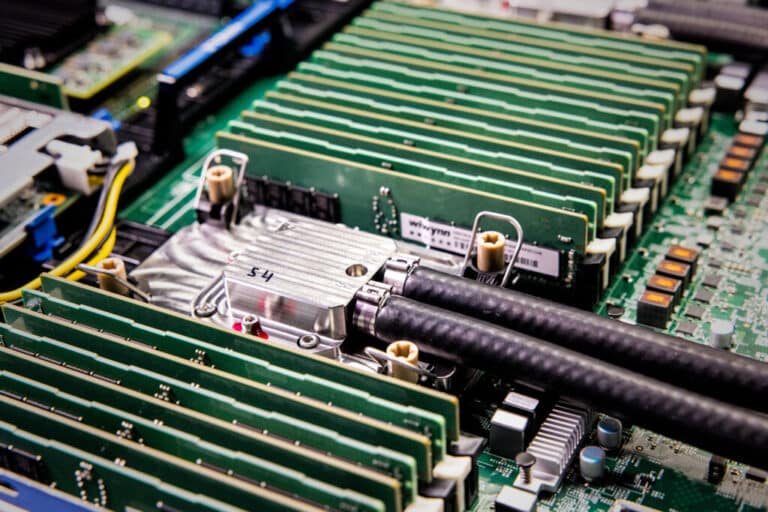

Microsoft has unveiled an innovative chip cooling method that uses microfluidic technology. The approach involves etching microscopic channels directly into silicon chips, enabling coolant to flow across the chip surface and extract heat more effectively. Photo credit: Dan DeLong for Microsoft.

Creating functional microfluidic systems required solving numerous technical puzzles. Channel depth affects both cooling capacity and structural integrity: grooves must be deep enough to circulate adequate coolant without clogging, yet shallow enough that they do not weaken the silicon to the point of cracking under thermal stress.
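
The depth trade-off can be illustrated with two numbers: the laminar pressure drop needed to push coolant through the channels, and the silicon left beneath them. For a wide, shallow slot, the textbook parallel-plate result is Δp = 12·μ·L·Q / (w·h³), so halving the depth raises the pressure drop eightfold. All dimensions, the flow rate, and the minimum-thickness threshold below are hypothetical; real vein-like channel networks need numerical simulation, and this sketch models neither clogging nor mechanical stress.

```python
MU_WATER_PA_S = 1.0e-3  # dynamic viscosity of water near 20 C


def slot_pressure_drop_pa(flow_m3_s: float, length_m: float, width_m: float,
                          depth_m: float, mu_pa_s: float = MU_WATER_PA_S) -> float:
    """Laminar pressure drop across a wide, shallow slot (parallel-plate model, width >> depth)."""
    return 12.0 * mu_pa_s * length_m * flow_m3_s / (width_m * depth_m ** 3)


# All values below are hypothetical.
DIE_THICKNESS_M = 400e-6
MIN_REMAINING_SILICON_M = 250e-6   # assumed structural floor
LENGTH_M = 0.01
WIDTH_M = 0.01
FLOW_M3_S = 0.25e-3 / 60           # 0.25 L/min; Reynolds number about 830, so laminar

for depth_um in (50, 100, 200):
    depth_m = depth_um * 1e-6
    dp_bar = slot_pressure_drop_pa(FLOW_M3_S, LENGTH_M, WIDTH_M, depth_m) / 1e5
    remaining_um = (DIE_THICKNESS_M - depth_m) * 1e6
    ok = DIE_THICKNESS_M - depth_m >= MIN_REMAINING_SILICON_M
    print(f"{depth_um:>3} um deep: {dp_bar:6.2f} bar, {remaining_um:.0f} um silicon left, "
          f"{'ok' if ok else 'too thin'}")
```

Shallow channels keep the die strong but demand steep pumping pressure; deep ones flow easily but eat into the silicon, which is the balance the design iterations had to strike.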

The development team produced four design iterations within a single year, testing different etching methods and channel configurations. Each prototype required leak-proof packaging to contain pressurized coolant, custom formulation of cooling fluids optimized for microchannels, and manufacturing processes that integrate etching into standard chip production workflows.

“Systems thinking is crucial when developing a technology like microfluidics,” said Husam Alissa, director of systems technology in Cloud Operations and Innovation at Microsoft. “You need to understand systems interactions across silicon, coolant, server and the datacenter to make the most of it.”

Testing also involved running actual server workloads. Microsoft used a microfluidics-cooled server to run core services for a simulated Teams meeting, showing that the technology works under realistic service workloads rather than only in thermal test benchmarks.

Performance Advantages Beyond Temperature Reduction

This microfluidics chip developed by Microsoft is covered and has tubing attached so the coolant can flow safely. Photo credit: Dan DeLong for Microsoft.

Microfluidic cooling enables operating modes that are difficult to achieve with conventional systems. Microsoft Teams illustrates a workload pattern that benefits from better thermal management. Teams comprises approximately 300 interconnected services handling meeting connections, audio merging, transcription, chat storage, and recording.

Usage patterns create predictable demand spikes. Most meetings start on the hour or half-hour, causing call controller services to experience intense activity from five minutes before until three minutes after these times, then idling between peaks.
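
The Teams pattern above maps to a simple time-window check. The sketch below flags timestamps from five minutes before to three minutes after each hour or half-hour; the function name and the idea of using it to decide when to add capacity or raise clock speeds are illustrative assumptions, not Microsoft's scheduling logic.

```python
from datetime import datetime


def in_meeting_peak(ts: datetime, before_min: int = 5, after_min: int = 3) -> bool:
    """True from `before_min` minutes before to `after_min` minutes after each :00 or :30 boundary."""
    # Minutes elapsed since the most recent :00 or :30 boundary (0 <= m < 30).
    m = ts.minute % 30 + ts.second / 60
    return m <= after_min or m >= 30 - before_min


# Hypothetical use: check a few times around a 10:00 meeting start.
for hh, mm in [(9, 50), (9, 55), (10, 0), (10, 3), (10, 4), (10, 15), (10, 25)]:
    ts = datetime(2025, 1, 6, hh, mm)
    print(ts.strftime("%H:%M"), "peak" if in_meeting_peak(ts) else "idle")
```

Because the windows are predictable, a scheduler could boost performance only for these few minutes, which is exactly where extra thermal headroom pays off.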

Datacenters handle such fluctuations through two approaches: installing excess capacity that sits unused most of the time, or overclocking processors to temporarily boost performance. Overclocking increases heat production, limiting how aggressively chips can be pushed without risking thermal damage.

“Microfluidics would allow us to overclock without worrying about melting the chip down because it’s a more efficient cooler of the chip,” said Jim Kleewein, technical fellow for Microsoft 365 Core Management. “There are advantages in cost and reliability. And speed, because we can overclock.”

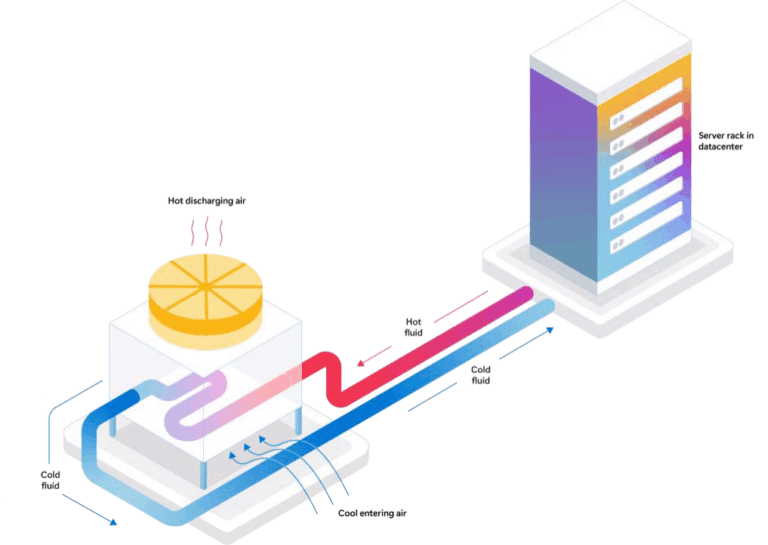

The technology also improves power usage effectiveness, a critical metric for datacenter energy efficiency. Since coolant contacts hot silicon directly, it doesn’t require extreme chilling to absorb heat effectively. This reduces energy spent on cooling infrastructure while improving overall thermal performance compared to cold plates that need lower temperatures to compensate for insulating layers.
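
Power usage effectiveness is defined as total facility energy divided by the energy delivered to IT equipment, so any cut in cooling energy shows up directly as a lower ratio (1.0 is the ideal). The energy figures below are invented for illustration and assume the IT load stays constant; the source does not report PUE values for microfluidic cooling.

```python
def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return (it_kwh + cooling_kwh + other_overhead_kwh) / it_kwh


# Hypothetical energy totals over the same period.
IT_KWH = 1000.0
OTHER_OVERHEAD_KWH = 50.0   # lighting, power conversion losses, etc.

baseline = pue(IT_KWH, cooling_kwh=250.0, other_overhead_kwh=OTHER_OVERHEAD_KWH)
less_chilling = pue(IT_KWH, cooling_kwh=150.0, other_overhead_kwh=OTHER_OVERHEAD_KWH)
print(f"baseline PUE:            {baseline:.2f}")
print(f"with less chiller energy: {less_chilling:.2f}")
```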

Impact on Datacenter Design and Energy Consumption

Heat constraints currently limit how densely servers can be packed. Physical proximity reduces latency (the communication delay between machines), but concentrating processors creates thermal hotspots that conventional cooling cannot adequately address.

Microfluidics could allow tighter server spacing with less risk of overheating. Datacenters could then increase computational capacity within existing buildings rather than constructing additional facilities, reducing both capital expenditure and environmental impact.

“If microfluidic cooling can use less power to cool the datacenters, that will put less stress on energy grids to nearby communities,” said Ricardo Bianchini, Microsoft technical fellow and corporate vice president for Azure specializing in compute efficiency.

Judy Priest, corporate vice president and chief technical officer of Cloud Operations and Innovation at Microsoft, emphasized the density and performance benefits: “Microfluidics would allow for more power-dense designs that will enable more features that customers care about and give better performance in a smaller amount of space.”

The approach also produces higher-temperature, and therefore more useful, waste heat that is easier to recapture and reuse, supporting datacenter sustainability goals such as becoming carbon negative and water positive.

Enabling Future Chip Architectures

Removing thermal constraints opens possibilities for entirely new processor designs. Three-dimensional chip stacking places multiple silicon layers vertically, drastically reducing signal travel distances and latency. Current thermal limitations make 3D architectures impractical because stacked chips concentrate heat in small volumes that conventional cooling cannot manage.

Microfluidics could route liquid through stacked configurations using cylindrical pins between layers, which work like the pillars of a multi-story parking structure with coolant circulating around them. This could enable 3D chips that deliver substantially better performance than single-layer designs.

“Anytime we can do things more efficiently and simplify this opens up the opportunity for new innovation where we could look at new chip architectures,” Priest said.

Enhanced cooling likewise permits more processor cores per chip or additional chips per rack, improving speed and enabling smaller datacenters with equivalent computational power.

Integration with Microsoft’s Silicon Strategy

Microfluidic development aligns with Microsoft’s broader hardware initiatives. The company expects to spend over $30 billion on capital expenditures in the current quarter, including development of proprietary Cobalt and Maia chip families optimized for Microsoft and customer workloads.

Cobalt 100 processors already deliver energy-efficient compute power, scalability, and performance benefits. Microsoft pursues a systems approach that optimizes every component—silicon, packaging, boards, racks, servers, and datacenters—to work together cohesively rather than treating cooling as an isolated concern.

The company continues investigating how to incorporate microfluidic cooling into future generations of first-party chips while collaborating with fabrication and silicon partners to bring the technology into production across datacenters.

Industry-Wide Implications

Microsoft positions microfluidics as an industry solution rather than a proprietary advantage. Making the technology widely accessible accelerates development and benefits all datacenter operators.

“We want microfluidics to become something everybody does, not just something we do,” Kleewein said. “The more people that adopt it the better, the faster the technology is going to develop, the better it’s going to be for us, for our customers, for everybody.”

The successful tests show that microfluidics has moved beyond theoretical promise into working prototypes, an important step toward deployment as AI computing demands continue to grow rapidly.

Sources: Microsoft, Microsoft Azure

Written by Alius Noreika