Prompt compression for Claude shortens input text by removing redundant tokens while preserving the essential meaning. Applied well, it can lead to faster responses, lower computational costs, and better results on tasks that involve long inputs.

The Cost of Words

Every word you type into a large language model like Claude is broken down into tokens, the subword units the model actually processes. Even a short sentence can produce several tokens, and rare words, code, and non-English text tend to split into more tokens per word. As models process thousands or even millions of tokens daily, both speed and cost become significant concerns.

Prompt compression is a growing area of interest for researchers and developers alike. It promises a way to streamline input by removing extraneous data while keeping the parts that matter. For enterprise users, it means trimming unnecessary context. For casual users, it could mean quicker and more responsive AI interactions.

Why Compress at All?

The need to compress tokens stems from the limitations of current large language models. Even with expanded context windows (Claude 3.5 Sonnet, for instance, accepts up to 200,000 tokens), the computational burden grows with prompt length; in a standard transformer, the cost of attention grows roughly quadratically with the number of input tokens. More tokens mean more processing time and, for API users, a higher bill, since input is priced per token.
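
To make the per-token billing concrete, here is a minimal Python sketch that treats input cost as linear in token count. The function name `estimate_input_cost` and the $3-per-million-token price are illustrative assumptions, not a quoted rate; check the current price list for the model you actually use.

```python
def estimate_input_cost(num_tokens: int, usd_per_million_tokens: float) -> float:
    """Return the input-token cost of one API call, in US dollars.

    Billing is assumed to be linear in token count; the price is a
    placeholder parameter, not an official rate.
    """
    if num_tokens < 0 or usd_per_million_tokens < 0:
        raise ValueError("token count and price must be non-negative")
    return num_tokens * usd_per_million_tokens / 1_000_000


# Hypothetical price of $3 per million input tokens, for illustration only.
PRICE = 3.0
full = estimate_input_cost(150_000, PRICE)
compressed = estimate_input_cost(50_000, PRICE)  # same prompt after 3x compression
print(f"full: ${full:.2f}, compressed: ${compressed:.2f}")
# prints "full: $0.45, compressed: $0.15"
```

Because the relationship is linear, a prompt compressed 3x costs a third as much to send, and that saving repeats on every call.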

But there’s also a quality issue. Longer prompts don’t always improve results. In some cases, excessive context can dilute relevance or confuse the model. Compression can help by making inputs more focused, so the model can extract what it needs without wading through excess.

Task-Aware vs. Task-Agnostic Compression

Not all compression techniques are created equal. Researchers generally classify them into two groups: task-aware and task-agnostic.

Task-aware compression methods adjust the input based on the goal. For example, if the prompt is a question, these methods analyze which parts of the input are most relevant to that query. LongLLMLingua, a popular task-aware method, takes a multi-step, question-aware approach: it uses a small language model to identify high-entropy (that is, informative) tokens with respect to the question and removes what isn’t needed. While effective, task-aware methods are less flexible. They are tuned for specific tasks and may not perform well outside those bounds, and because they depend on the query, the same context has to be recompressed for every new question.
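
The question-aware idea can be illustrated with a deliberately crude stand-in. The sketch below is not LongLLMLingua; it simply scores each sentence of a context by how many words it shares with the question and keeps the best-scoring ones. The function `task_aware_compress` and its word-overlap scoring are hypothetical, chosen only to show how knowing the question changes what gets kept.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercase word set, used here as a crude relevance signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def task_aware_compress(context: str, question: str, keep: int) -> str:
    """Keep the `keep` sentences that share the most words with the question.

    Hypothetical toy, not LongLLMLingua. Kept sentences are returned in
    their original order so the context still reads naturally.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", context) if s.strip()]
    q = _words(question)
    # Score each sentence by how many question words it contains.
    scored = [(len(q & _words(s)), i) for i, s in enumerate(sentences)]
    # Highest overlap first; ties go to the earlier sentence.
    top = sorted(scored, key=lambda t: (-t[0], t[1]))[:keep]
    kept_indices = sorted(i for _, i in top)
    return " ".join(sentences[i] for i in kept_indices)


context = (
    "The invoice was sent in March. "
    "Our office cat is named Miso. "
    "Payment for the invoice is due in April."
)
print(task_aware_compress(context, "When is the invoice payment due?", keep=2))
# prints "The invoice was sent in March. Payment for the invoice is due in April."
```

Common words such as "the" and "is" also count toward the score in this toy; real task-aware methods use a language model rather than raw word overlap to judge relevance.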

Task-agnostic compression, by contrast, works across use cases. These methods don’t require knowledge of the model’s task; they judge each token purely by how much information it carries within the prompt, so one compressed prompt can serve many different questions. This flexibility makes task-agnostic approaches a natural fit for general-purpose models like Claude, which often handle a wide range of topics in a single session.
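
For contrast, a task-agnostic compressor never sees the question. The hypothetical `task_agnostic_compress` below estimates each word's self-information, -log2 p, from how often the word appears in the prompt itself, then keeps the most informative words up to a target `rate`. Real methods estimate these probabilities with a small language model; using in-prompt word counts is a simplifying assumption made here to keep the example self-contained.

```python
import math
from collections import Counter


def task_agnostic_compress(prompt: str, rate: float) -> str:
    """Keep roughly `rate` of the words, preferring the most informative ones.

    Hypothetical toy: a word's probability p is its relative frequency in the
    prompt, and its self-information is -log2(p). No question is consulted.
    """
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    words = prompt.split()
    if not words:
        return ""
    counts = Counter(w.lower() for w in words)
    total = len(words)
    info = [-math.log2(counts[w.lower()] / total) for w in words]
    n_keep = max(1, round(rate * total))
    # Rank positions by information (ties go to the earlier word),
    # then restore the original order of the survivors.
    ranked = sorted(range(total), key=lambda i: (-info[i], i))[:n_keep]
    return " ".join(words[i] for i in sorted(ranked))


print(task_agnostic_compress("the cat sat on the mat the dog sat on the rug", rate=0.5))
# prints "cat sat on mat dog rug"
```

The frequent word "the" carries the least information here and is dropped first, while rare content words survive.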

Image source: TechTalks

LLMLingua-2 and the Claude Ecosystem

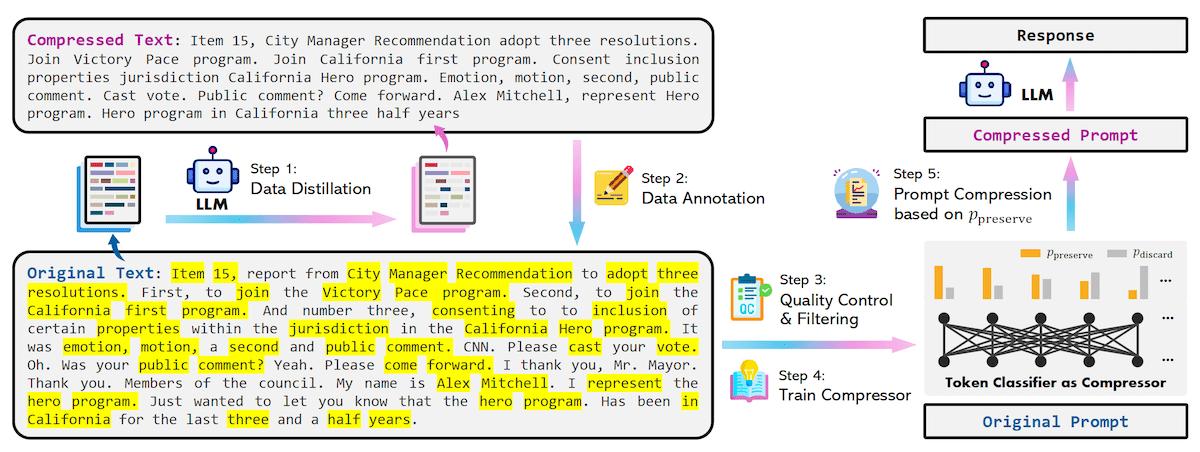

Among the more promising developments in token compression is LLMLingua-2, a task-agnostic method developed by researchers at Tsinghua University and Microsoft. Unlike its predecessors, which score tokens by information entropy computed with a causal language model, LLMLingua-2 frames compression as a token classification problem: should this token be kept or discarded?

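LLMLingua-2 uses a lightweight, bidirectional transformer encoder, which, unlike the unidirectional (left-to-right) models behind most LLMs, can use the context on both sides of every token when deciding its label. It is trained on data distilled from GPT-4: GPT-4 compresses original texts, and each original token is then labeled as kept or discarded. In the authors’ evaluations, the resulting model preserves the essential information while removing up to 80% of the original text.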
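
The keep-or-discard framing can be sketched as a selection step. Assume a classifier has already assigned every token a probability of being kept (the numbers below are invented for illustration, and no model is included); the hypothetical `select_tokens` then keeps the highest-scoring tokens until the target `rate` is met, preserving their original order. This mirrors the general shape of LLMLingua-2's approach but is not its implementation.

```python
from typing import Sequence


def select_tokens(tokens: Sequence[str], keep_probs: Sequence[float], rate: float) -> list[str]:
    """Keep about `rate` of the tokens, choosing those with the highest keep probability.

    `keep_probs` stands in for a token classifier's output (hypothetical here).
    Survivors are returned in their original order.
    """
    if len(tokens) != len(keep_probs):
        raise ValueError("need exactly one probability per token")
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    n_keep = max(1, round(rate * len(tokens))) if tokens else 0
    # Highest probability first; ties go to the earlier token.
    ranked = sorted(range(len(tokens)), key=lambda i: (-keep_probs[i], i))[:n_keep]
    return [tokens[i] for i in sorted(ranked)]


tokens = ["So", "um", "the", "meeting", "moved", "to", "Friday", "3pm"]
probs = [0.10, 0.05, 0.30, 0.90, 0.80, 0.40, 0.95, 0.90]  # invented scores
print(select_tokens(tokens, probs, rate=0.5))
# prints ['meeting', 'moved', 'Friday', '3pm']
```

Because the scores depend only on the text, not on any question, the same compressed context can be reused across different queries.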

This is where Claude users stand to benefit. Claude’s generous context limits let it accept expansive prompts, but every input token still adds latency and cost. For clients who send repeated queries or carry on long-form interactions, compressing prompts before they reach the model reduces latency, lowers per-call costs, and eases GPU memory demands on the serving side. Workflows that run LLMLingua-2, or a similar technique, as a preprocessing step in front of Claude can maintain performance while making the model cheaper and more sustainable to use.
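
One reasonable way to wire this up is to compress only the long supporting material and leave the question untouched. The sketch assumes a pluggable `compress` function (here a hypothetical filler-word remover standing in for LLMLingua-2) and builds a request body whose keys (`model`, `max_tokens`, `messages`) follow the Anthropic Messages API. The model ID is a placeholder, and nothing is sent over the network.

```python
from typing import Callable

FILLER = {"um", "uh", "basically", "actually", "really"}  # hypothetical filler list


def drop_filler(text: str) -> str:
    """Placeholder compressor: removes a few filler words. Not LLMLingua-2."""
    return " ".join(w for w in text.split() if w.lower().strip(",.") not in FILLER)


def build_claude_request(
    context: str,
    question: str,
    compress: Callable[[str], str],
    model: str,
    max_tokens: int = 1024,
) -> dict:
    """Assemble a Messages-API-style request body with a compressed context.

    Only the bulky context goes through `compress`; the question is passed
    through verbatim so the instruction itself is never altered.
    """
    compressed_context = compress(context)
    prompt = f"Context:\n{compressed_context}\n\nQuestion: {question}"
    return {
        "model": model,  # placeholder: supply a real Claude model ID
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_claude_request(
    context="Um, support basically answers tickets in English and Spanish, really only on weekdays.",
    question="Which languages does support cover?",
    compress=drop_filler,
    model="your-claude-model-id",
)
print(request["messages"][0]["content"])
```

With the official `anthropic` Python SDK, a body like this could be passed as `client.messages.create(**request)`. Keeping the question verbatim is a deliberate design choice: any compression error then affects the supporting context rather than the instruction.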

Real-World Impact and Tradeoffs

LLMLingua-2 and similar tools have produced promising results. Compression ratios between 2x and 5x are common, and reported inference times drop by as much as 70%. In some cases, compressed prompts even perform better than their longer counterparts, especially when the target model struggles with excessive context.
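
Compression ratios and percentages removed are two views of the same number: a ratio r leaves 1/r of the tokens, so it removes 1 - 1/r of them. The short sketch below (the helper name `fraction_removed` is illustrative) shows that the 2x-to-5x range corresponds to removing 50% to 80% of the prompt, consistent with the "up to 80%" figure cited for LLMLingua-2.

```python
def fraction_removed(ratio: float) -> float:
    """For a compression ratio r = original tokens / compressed tokens,
    return the share of tokens removed, 1 - 1/r."""
    if ratio < 1:
        raise ValueError("a ratio below 1 would mean the prompt grew")
    return 1 - 1 / ratio


for r in (2, 3, 5):
    print(f"{r}x -> {fraction_removed(r):.0%} of tokens removed")
# prints:
# 2x -> 50% of tokens removed
# 3x -> 67% of tokens removed
# 5x -> 80% of tokens removed
```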

There are tradeoffs, however. Task-aware methods still outperform task-agnostic ones on specialized tasks where the question provides strong clues about which context matters. Compression isn’t just about shortening input; it’s about making strategic choices about what the model needs to see.

The Bottom Line

Prompt compression for Claude reflects a broader shift in how we think about interacting with language models. The goal is no longer to feed the model as much information as possible, but to feed it the right information in the most efficient way.

Whether you’re building AI tools for research, customer support, or creative writing, understanding how compression works—and when to use it—can help you get more out of your interactions with Claude, without overspending or overloading the system.

As LLMs continue to expand in scope, prompt compression may be key to keeping their inputs lean and their responses relevant and practical.

Source: TechTalks
