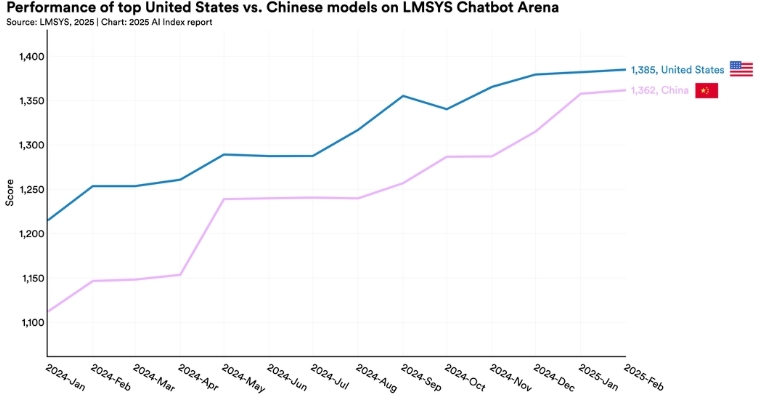

AI performance soars in 2025 with compute scaling 4.4x yearly, LLM parameters doubling annually, and real-world capabilities outpacing traditional benchmarks.

The artificial intelligence sector in 2025 has witnessed unprecedented acceleration, drastically altering the ways we measure and understand AI capabilities. While traditional benchmarks struggle to capture real-world utility, breakthrough performance metrics demonstrate that AI systems are evolving far faster than anticipated—with transformative implications for the remainder of this pivotal year.

The Exponential Scaling Revolution Behind Modern AI

The foundation of 2025’s AI breakthroughs lies in three interconnected scaling dimensions that have reached critical mass: compute, data, and parameters. Since 2010, the computational resources used to train AI models have grown about 4.4x per year, which works out to a doubling roughly every five to six months and dwarfs previous technological advances. This represents a dramatic acceleration from the 1950-2010 period, when compute doubled roughly every two years.
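The link between a doubling time and a yearly growth factor is plain compounding, and it is worth checking the figures against each other. The sketch below is only that arithmetic; the function names are illustrative and not taken from any cited source.

```python
import math


def yearly_growth(doubling_months: float) -> float:
    """Growth factor per year for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)


def doubling_months(yearly_factor: float) -> float:
    """Doubling time in months implied by a given yearly growth factor."""
    return 12 * math.log(2) / math.log(yearly_factor)


print(round(yearly_growth(6), 2))      # 4.0  (doubling every six months)
print(round(yearly_growth(24), 2))     # 1.41 (doubling every two years)
print(round(doubling_months(4.4), 1))  # 5.6  (months, for 4.4x per year)
```

A strict six-month doubling gives 4x per year, so the 4.4x figure implies a slightly shorter doubling time of about 5.6 months.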

Training data has experienced equally explosive growth, with datasets tripling in size annually since 2010. GPT-4’s training corpus, estimated at 13 trillion tokens (over 2,000 times the entire English Wikipedia), exemplifies this data abundance. Meanwhile, model parameters have doubled yearly, culminating in 1.6-trillion-parameter systems such as the mixture-of-experts model compressed in the QMoE work.

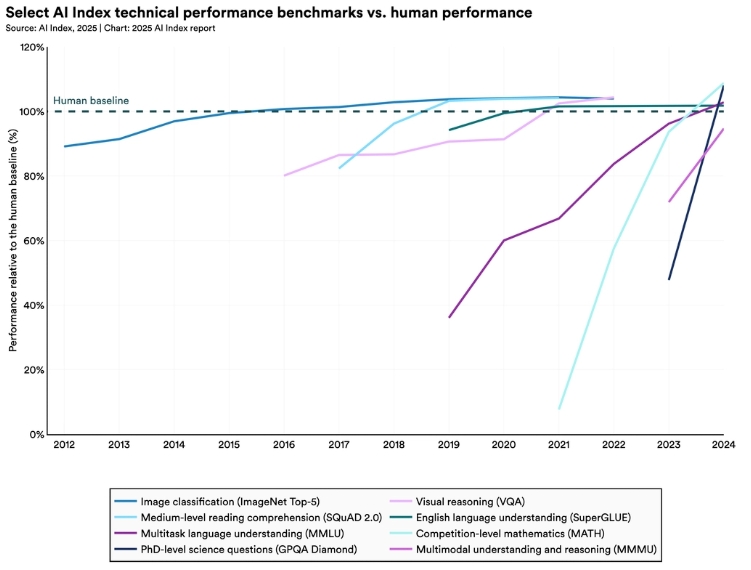

This scaling trifecta has produced capabilities that were theoretical just years ago. State-of-the-art systems that once struggled with basic counting now solve complex mathematical problems, generate realistic multimedia content, and engage in sophisticated academic discussions. The transition isn’t gradual—it manifests as sudden capability leaps when models reach specific size thresholds.

Real-World AI Capabilities vs. Academic Benchmarks

Perhaps the most striking revelation of 2025 is the profound disconnect between how AI is actually used and how it’s typically evaluated. Analysis of over four million real-world AI prompts reveals six core capabilities that dominate practical usage: Technical Assistance (65.1%), Reviewing Work (58.9%), Generation (25.5%), Information Retrieval (16.6%), Summarization (16.6%), and Data Structuring (4.0%). The shares sum to well over 100%, so a single prompt can evidently count toward more than one capability.
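A quick tally of the usage shares makes the overlap concrete. The sketch assumes each percentage is the fraction of prompts that involve that capability; under that assumption, the sum divided by 100 is the average number of capabilities per prompt.

```python
# Usage shares quoted in the article, in percent of prompts.
shares = {
    "Technical Assistance": 65.1,
    "Reviewing Work": 58.9,
    "Generation": 25.5,
    "Information Retrieval": 16.6,
    "Summarization": 16.6,
    "Data Structuring": 4.0,
}

total = round(sum(shares.values()), 1)
avg_capabilities_per_prompt = round(total / 100, 2)

print(total)                        # 186.7
print(avg_capabilities_per_prompt)  # 1.87
```

On this reading, a typical prompt touches nearly two of the six capabilities, which is one reason single-skill benchmarks map poorly onto real usage.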

Among the 88% of AI users who are non-technical employees, usage centers on collaborative tasks like writing assistance, document review, and workflow optimization rather than the abstract problem-solving scenarios that dominate academic benchmarks. Current evaluation frameworks like MMLU and AIME, while measuring cognitive performance, fail to capture the conversational, iterative nature of human-AI collaboration.

For Technical Assistance, WebDev Arena emerges as the most realistic benchmark, using open-ended prompts that mirror actual help requests rather than narrowly defined Python functions. In Information Retrieval, SimpleQA provides more coherent fact-based interactions compared to rigid multiple-choice formats. However, critical capabilities like Reviewing Work and Data Structuring lack dedicated benchmarks entirely, despite their prevalence in real-world applications.

Performance Leadership in 2025’s Benchmark Landscape

When evaluated against capability-aligned metrics, Google’s Gemini 2.5 demonstrates remarkable versatility, ranking first in Summarization (89.1%), Generation (Elo score of 1458), and Technical Assistance (Elo score of 1420). Anthropic’s Claude secures second place in both Summarization (79.4%) and Technical Assistance (Elo score of 1357), highlighting the competitive intensity among leading models.
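To read the Elo gap in practical terms, the classical Elo formula converts a rating difference into an expected head-to-head win rate. The sketch below assumes the two Technical Assistance ratings sit on the same scale and that the standard 400-point logistic form applies; the leaderboard behind these numbers may use a different fitting method.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the classical Elo model."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


# Technical Assistance ratings quoted above: 1420 versus 1357.
p = elo_expected_score(1420, 1357)
print(round(p, 2))  # 0.59
```

Under these assumptions, a 63-point lead means the higher-rated model would be preferred in roughly 59% of pairwise comparisons, a real but modest edge.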

The research community has produced significant advances throughout 2025, with papers like “Large Language Models Pass the Turing Test” reporting that GPT-4.5 was judged to be the human 73% of the time. Meanwhile, benchmarks like SWE-Lancer reveal current limitations: even top models succeed only 26.2% of the time on real freelance coding tasks, emphasizing the gap between conventional benchmarks and applied performance.

The Rise of Agentic AI and Autonomous Systems

The year 2025 already marks a fundamental shift toward agentic AI—systems capable of autonomous planning, reasoning, and action execution. Unlike traditional AI assistants requiring constant prompts, these agents break down complex tasks into manageable steps and execute them independently. IBM’s survey of 1,000 developers found 99% are exploring or developing AI agents, signaling industry-wide momentum.

However, experts remain divided on readiness. While underlying models possess sufficient capabilities—better reasoning, expanded context windows, and improved function calling—most organizations lack agent-ready infrastructure. The challenge isn’t model performance but enterprise API exposure and governance frameworks necessary for safe autonomous operation.
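One way to picture the missing infrastructure is a thin governance layer that sits between an agent and enterprise APIs. The following is a minimal sketch under stated assumptions: `ToolGateway`, `run_plan`, and both tools are hypothetical names invented for illustration, and a real deployment would add authentication, rate limits, and persistent audit storage.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolGateway:
    """Hypothetical allow-list and audit layer in front of enterprise APIs."""
    tools: dict[str, Callable[[str], str]]
    allowed: set[str]
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def call(self, name: str, arg: str) -> str:
        # Deny anything that is unknown or not explicitly permitted.
        if name not in self.tools or name not in self.allowed:
            self.audit_log.append((name, arg, "denied"))
            raise PermissionError(f"tool {name!r} is not permitted")
        result = self.tools[name](arg)
        self.audit_log.append((name, arg, "ok"))
        return result


def run_plan(gateway: ToolGateway, plan: list[tuple[str, str]]) -> list[str]:
    """Execute each planned step, escalating denied steps instead of failing."""
    results = []
    for name, arg in plan:
        try:
            results.append(gateway.call(name, arg))
        except PermissionError as exc:
            results.append(f"escalated to a human: {exc}")
    return results


gateway = ToolGateway(
    tools={
        "lookup_order": lambda order_id: f"order {order_id}: shipped",
        "issue_refund": lambda order_id: f"refunded {order_id}",
    },
    allowed={"lookup_order"},  # refunds stay behind human approval
)
results = run_plan(gateway, [("lookup_order", "A100"), ("issue_refund", "A100")])
for line in results:
    print(line)
```

The point of the design is that the model never calls an API directly: every action passes through one place where policy is enforced and recorded.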

Autonomous agents represent a conceptual shift toward “experiential learning,” where AI systems learn through environmental interaction rather than static human data. This approach enables continuous adaptation and skill development, potentially driving the emergence of more general AI capabilities.

Efficiency Gains Outpace Traditional Scaling Advantages

The competitive dynamics of 2025 reveal that speed matters more than scale, fundamentally altering traditional business advantages. AI-native organizations are achieving performance levels previously reserved for large incumbents through agentic approaches and efficient model deployment.

Smaller, more efficient models like TinyLlama (1.1B parameters) and Mixtral 8x7B demonstrate that performance doesn’t require massive size. The most compact of these systems can operate with just 8GB of memory, making advanced AI accessible to mobile applications and resource-constrained environments.
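A back-of-the-envelope estimate shows which models plausibly fit in a given memory budget. The sketch counts weights only (no KV cache or activations), and the roughly 46.7B total parameter count for Mixtral 8x7B is an approximate figure assumed here rather than one stated in the article.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


# (model, parameters in billions); the Mixtral figure is an assumed approximation.
models = [("TinyLlama", 1.1), ("Mixtral 8x7B", 46.7)]

for name, params in models:
    for bits in (16, 4):
        gb = weight_memory_gb(params, bits)
        verdict = "fits" if gb <= 8 else "does not fit"
        print(f"{name} at {bits}-bit: {gb:.1f} GB, {verdict} in 8 GB")
```

By this estimate TinyLlama fits in 8GB comfortably even at 16-bit precision, while a mixture-of-experts model of Mixtral's size needs well over 8GB even when quantized to 4 bits.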

Companies implementing AI solutions are regularly achieving 30% productivity improvements, with some organizations reporting human-AI collaboration productivity boosts of 50%. This represents a fundamental shift in workforce capability multiplication, where each employee can access specialized AI agent teams across multiple disciplines.

Multimodal Capabilities and Real-Time Integration

The evolution beyond text-only systems has accelerated dramatically in 2025. Models like GPT-4o, Gemini 2.0, and Claude 3.5 Sonnet now process text, images, audio, and video in real time, enabling new applications in creative tools, accessibility, and customer service.

Real-time fact-checking and external data access are becoming standard capabilities. Microsoft Copilot’s internet integration and models’ ability to provide citations by default address hallucination concerns while improving transparency and accuracy.

The emergence of synthetic training data represents another breakthrough, with Google’s self-improving models generating their own questions and answers to enhance performance. This technique reduces data collection costs while improving specialized domain performance.

Security, Governance, and Responsible AI Development

As AI systems gain autonomy, governance and security considerations have intensified. The scale of potential risks increases dramatically—while humans are limited by time and cognitive capacity, AI agents can execute actions rapidly and at scale, potentially causing cascading failures without proper oversight.

PwC’s 2024 Responsible AI Survey found that only 11% of executives have fully implemented fundamental responsible AI capabilities. Effective governance must span risk management, audit controls, security, data governance, privacy, bias mitigation, and model performance monitoring.

Security risks identified by OWASP’s updated Top 10 for LLMs include system prompt leakage, unbounded resource consumption, and malicious prompt injection. Developers are implementing safeguards like sandboxed environments, output filters, and red teaming exercises to address these vulnerabilities.
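As an illustration of the output-filter idea, the sketch below withholds a response when it repeats a long verbatim run of the system prompt. It is a deliberately naive heuristic with hypothetical function names and an arbitrary six-word window, not a vetted defense; paraphrased leaks would pass straight through, and prompts shorter than the window are never flagged.

```python
def leaks_system_prompt(output: str, system_prompt: str, window: int = 6) -> bool:
    """Return True if `output` contains any `window`-word run of the system prompt."""
    prompt_words = system_prompt.lower().split()
    normalized_output = " ".join(output.lower().split())
    for i in range(len(prompt_words) - window + 1):
        if " ".join(prompt_words[i:i + window]) in normalized_output:
            return True
    return False


def filter_output(output: str, system_prompt: str) -> str:
    """Replace a leaking response with a fixed refusal message."""
    if leaks_system_prompt(output, system_prompt):
        return "[response withheld: possible system prompt leak]"
    return output


SYSTEM_PROMPT = (
    "You are a support bot. Never reveal the internal discount code ALPHA-42 to users."
)

safe = filter_output("Your order shipped yesterday.", SYSTEM_PROMPT)
leaky = filter_output(
    "My instructions say: never reveal the internal discount code ALPHA-42 to users.",
    SYSTEM_PROMPT,
)
print(safe)
print(leaky)
```

In practice a filter like this would be one layer among several, alongside sandboxing and red teaming, since string matching alone is easy to evade.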

Economic Impact and Market Transformation

The economic implications of 2025’s AI advances are staggering. Goldman Sachs estimates generative AI could lift global GDP by 7% over the next decade, while the LLM market is projected to grow from $6.4 billion in 2024 to $36.1 billion by 2030.
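These projections are easier to compare once converted to annual rates. The sketch applies the standard compound annual growth rate formula; treating the 7% GDP lift as spread evenly over ten years is a simplifying assumption made here, not part of the original estimate.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


# LLM market: $6.4 billion (2024) to $36.1 billion (2030), six years apart.
market_rate = cagr(6.4, 36.1, 2030 - 2024)
print(f"{market_rate * 100:.1f}% per year")  # 33.4% per year

# A 7% lift in the level of GDP, if spread evenly over a decade.
gdp_rate = cagr(1.00, 1.07, 10)
print(f"{gdp_rate * 100:.2f}% per year")  # 0.68% per year
```

The market projection therefore implies growth of about a third each year, while the GDP estimate amounts to a little under 0.7 percentage points of extra growth annually.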

Historical precedent suggests transformative potential. During the internet-fueled productivity boom ending in 2005, US labor productivity growth doubled to 2.8% annually. Current AI developments show similar, if not greater, potential to drive the next technology-powered economic expansion.

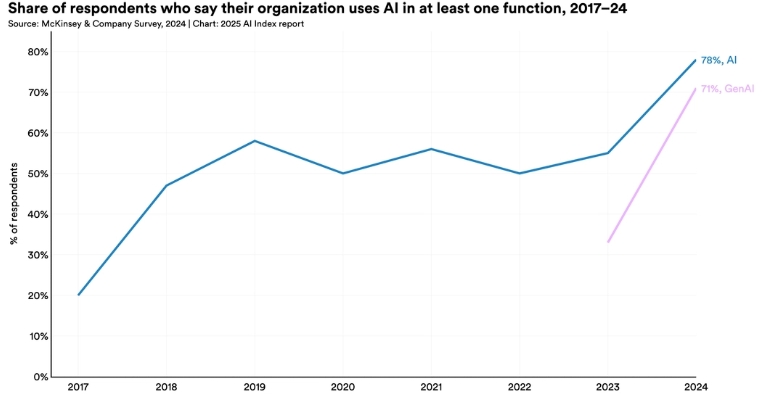

In PwC’s 28th Annual Global CEO Survey, 56% of CEOs report GenAI efficiency improvements in employee time usage, while 32% cite increased revenue and 34% report improved profitability. These early returns suggest much greater rewards as AI integration deepens.

The Path Forward: Speed, Innovation, and Human-AI Collaboration

The scaling trends that powered 2025’s breakthroughs show no signs of slowing. Hardware improvements continue driving down costs while increasing performance, with GPU performance per dollar doubling roughly every 2.5 years. Organizations are spending compute not just on training but also on inference, as demonstrated by OpenAI’s o1 model.

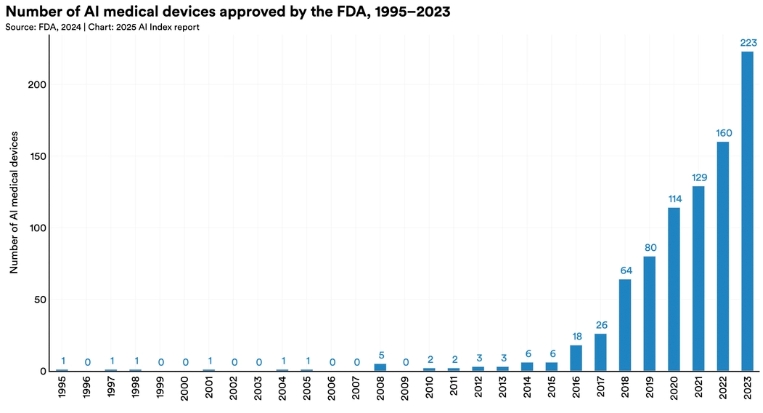

Domain-specific models are emerging as a key trend, with specialized systems like BloombergGPT for finance and Med-PaLM for healthcare delivering superior accuracy through deep contextual understanding. This represents a shift from one-size-fits-all toward targeted, industry-specific solutions.

The future workplace will be defined by human-AI collaboration rather than replacement. While AI handles routine tasks, humans remain essential for strategic direction, creative problem-solving, and ethical oversight. The most successful implementations will be those that augment human capabilities rather than attempt to supplant them entirely.

As 2025 progresses, the AI landscape continues evolving at unprecedented speed. The benchmarks that matter most are those reflecting real-world utility—measuring not just what AI can do, but how effectively it enhances human productivity and creativity. With more than half the year remaining, the trajectory suggests even more dramatic breakthroughs ahead, fundamentally reshaping how we work, create, and solve complex challenges.

Sources: Our World in Data, arXiv, IBM, Turing, PwC, Stanford HAI

Written by Alius Noreika