OpenAI has unveiled its latest reasoning models—o3 and o4-mini—designed specifically to excel at complex coding and mathematical challenges. These models represent a substantial advancement in AI problem-solving abilities, though their debut hasn’t been without controversy around benchmark testing and transparency.

The Promise of Enhanced Reasoning Capabilities

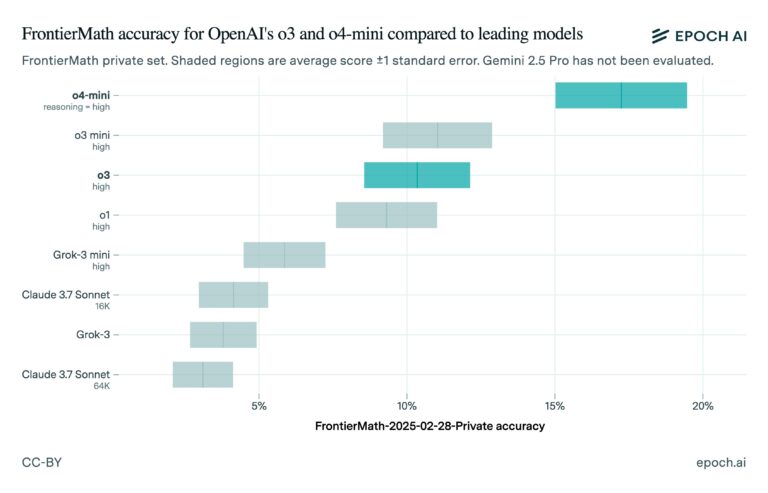

OpenAI presented its new reasoning models as a step change on hard mathematical problems. When o3 was first unveiled in December, OpenAI’s chief research officer Mark Chen said the model could correctly solve over 25% of problems on FrontierMath, a notoriously difficult benchmark of complex mathematical challenges. By his account, that far outpaced competing models, none of which reached 2% on the same benchmark.

“Today, all offerings out there have less than 2% [on FrontierMath],” Chen stated during the announcement livestream. “We’re seeing [internally], with o3 in aggressive test-time compute settings, we’re able to get over 25%.”

Reality Check: Independent Testing Results

However, independent verification has painted a different picture of o3’s capabilities. Epoch AI, the research institute that created the FrontierMath benchmark, ran its own tests on the publicly released version of o3. Its results, published after the model’s public release, showed the model achieving approximately 10% accuracy: well below the 25% OpenAI originally touted, though still above the sub-2% scores Chen had cited for other models.

Comparison of the reasoning capabilities of different large language models (LLMs). Image credit: Epoch AI

This discrepancy between OpenAI’s internal testing and independent verification raises important questions about benchmark methodology and transparency in AI development. Several factors may explain the performance gap:

- The publicly released o3 differs from the version OpenAI tested internally

- Different computing resources were allocated during testing

- Testing was conducted on a different version or subset of the FrontierMath benchmark

Epoch AI noted that “the difference between our results and OpenAI’s might be due to OpenAI evaluating with a more powerful internal scaffold, using more test-time [computing], or because those results were run on a different subset of FrontierMath.”
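To see why the choice of subset alone can move a headline number, consider a minimal sketch. Everything in it is hypothetical: the 20 problems, which ones are solved, and the two subsets are made-up illustration data, not FrontierMath problems or results from either OpenAI or Epoch AI.

```python
def accuracy(results, problem_ids):
    """Return the fraction of the selected problems that were solved."""
    ids = list(problem_ids)
    if not ids:
        raise ValueError("no problems selected")
    solved = sum(1 for pid in ids if results[pid])
    return solved / len(ids)


# Hypothetical per-problem outcomes: 20 problems, of which 4 are solved.
results = {pid: pid in {0, 1, 2, 3} for pid in range(20)}

full_set = range(20)      # every problem in the made-up benchmark
small_subset = range(8)   # a made-up subset that happens to hold all 4 solved problems

print(f"full set:     {accuracy(results, full_set):.0%}")
print(f"small subset: {accuracy(results, small_subset):.0%}")
```

The same set of answers scores 20% on the full set and 50% on the smaller subset, so two evaluators can report very different figures for one model without either making an arithmetic error. Differences in scaffolding and test-time compute would add further variation on top of this.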

Product Optimization vs. Raw Performance

Adding context to the benchmark disparity, the ARC Prize Foundation confirmed that “the public o3 model is a different model […] tuned for chat/product use,” suggesting intentional trade-offs between raw performance and practical usability.

Wenda Zhou, a member of OpenAI’s technical staff, acknowledged these differences during a subsequent livestream, explaining that the production version of o3 is “more optimized for real-world use cases” and for speed than the development version demonstrated in December.

“We’ve done [optimizations] to make the [model] more cost-efficient [and] more useful in general,” Zhou explained. “You won’t have to wait as long when you’re asking for an answer, which is a real thing with these [types of] models.”

This points to a recurring tension in commercial AI development: a model tuned for headline benchmark scores is not necessarily the one that works best in real-world applications, where response time and resource efficiency matter.

The Broader Model Ecosystem

While the benchmark discrepancy has drawn attention, it’s worth noting that OpenAI’s expanding model lineup demonstrates continuous progress. Both o3-mini-high and o4-mini already outperform the standard o3 on FrontierMath, showcasing rapid improvement cycles. Additionally, OpenAI plans to release o3-pro in the coming weeks, which promises even more advanced reasoning capabilities.

This progressive deployment strategy reflects the company’s commitment to iterative improvement while balancing performance with practical usability considerations.

Industry-Wide Benchmark Challenges

The situation with o3’s benchmark results is not occurring in isolation. The AI industry has witnessed multiple controversies around performance claims as companies compete for market position and investor attention.

Earlier this year, Epoch AI faced criticism for not disclosing its funding relationship with OpenAI until after the o3 announcement. Many academics who contributed to the FrontierMath benchmark were unaware of OpenAI’s involvement until it became public knowledge.

Similar controversies have affected other companies, with Elon Musk’s xAI facing accusations of publishing misleading benchmark charts for its Grok 3 model. Even Meta has recently admitted to publishing benchmark scores for a model version different from what was made available to developers.

Looking Forward: Balancing Claims and Reality

As AI reasoning capabilities continue to advance rapidly, the o3 benchmark situation highlights the increasing importance of standardized, independent testing methodologies. While internal testing provides valuable development feedback, third-party verification offers crucial validation that helps establish trust in a field where capabilities can sometimes be difficult to verify.

For users and developers interested in these reasoning models, the key takeaway is clear: while benchmark numbers provide useful comparison points, real-world performance and specific use case suitability often matter more than headline figures.

OpenAI’s reasoning models represent genuine advancement in AI problem-solving capabilities, even if the publicly available versions don’t fully match initial testing claims. As the technology continues to mature, both impressive benchmarks and practical usability will remain the key factors in evaluating these increasingly sophisticated AI systems.

Sources: TechCrunch

Written by Alius Noreika